1.

Introduction

One of the first challenges to arise is whether the automated vehicle, besides learning through trial-and-error testing, will be instructed in the basic traffic rules as laid down in the Geneva or Vienna Conventions. Human drivers are supposed to know these rules, such as driving on the right side of the road (even if that means driving on the left side in some cases) and stopping when a traffic light turns red after having been yellow for some time. This is nothing special to a human driver, but how will the automated vehicle know these rules and how to apply them? How does an automated vehicle comply with the traffic rules so that it is a safe road user?

From this legal perspective it becomes interesting to see what OEMs are actually doing to prepare their vehicles for an independent life as road users. We know they are driving millions of test miles with their vehicles, and rightly so: that is what all OEMs do before they launch a new model. But this time they not only test the car’s hardware and some supporting software, they also have to test the active driving behaviour of the vehicle as a road user in many different traffic situations. To support this, they use, for example, data on traffic accidents in order to «teach» the car how to avoid collisions. They are in fact training the car, i.e. its (deep) learning algorithm, by offering it a large training set of features of situations, trusting that this will enable the car to learn and eventually become an adequate «driver». But will that be enough? What are OEMs doing to make sure that collisions are avoided? As far as the statistics on accidents are concerned: they may be useful in mixed traffic, alongside human drivers, but how likely is it that automated cars will cause the same kind of accidents as human drivers? Automated vehicles are not easily distracted and do not check their email, but they may have difficulty assessing situations on the road that human drivers take in within a split second. And what if an accident happens? Wouldn’t we want the car to be able to explain which decisions it made and for what reasons, if only to be able to determine responsibility for the accident? So it really seems worthwhile to look in more depth into the traffic education of the automated vehicle.

The responsibility for an accident with an autonomous vehicle has at least two sides. The first is tort law liability. When the autonomous vehicle causes an accident while not obeying the traffic rules, the controller of the vehicle will be liable. It will have to pay for the damage. And if it happens again, it will have to pay again. To avoid this situation, the OEM will have to explain how the accident could happen and how it will make sure that it does not happen again. To achieve that level of control, the software of the vehicle must be legislation/rule based and, moreover, absolutely transparent in the development of its driving intelligence. Besides tort liability, this will be the incentive for the controller to make sure its vehicle functions flawlessly, thus taking on its societal responsibility.

2.

Autonomous driving car software

Modern vehicles are already largely software-driven in areas such as engine management, the braking system, suspension and steering. Many cars have ADAS features such as lane departure warning and control, traffic sign recognition, speed control and automatic distance control. The self-driving road user capabilities of the vehicle are obviously software-driven as well, but the possibilities for the driver to take back control of the car will be quite limited. As a consequence of the car taking over driving from humans, the vehicle needs not only a type approval and an admission for the vehicle’s software; it will also need a driver’s licence.

The second part of the development of driving skills with regard to automated vehicles will be more challenging. It is hard to imagine that road authorities would allow automated vehicles on the road without serious insight into the driving skills of the vehicle. A half-hour practical exam will give some insight into the capabilities of the vehicle, but it will probably not show the depth of the driving capabilities present or the algorithms underlying them. On top of that, just like the human driver, the self-driving vehicle may be learning every day on the road from the traffic situations it encounters. This learning process must be controlled. Moreover, it raises a more fundamental artificial intelligence question: what has the machine learned and what will the machine learn in the future, i.e. how will it interpret situations and transform them into future behaviour?2 This is where the concept of «meaningful human control»3 comes in. How can human control be maintained over a vehicle that is self-driving and uses machine learning algorithms?

3.

A future scenario of autonomous driving vehicles

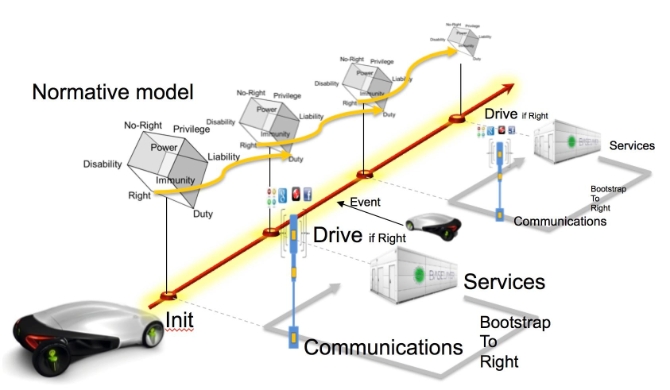

In Fig. 1 we show a future scenario of autonomous driving vehicles. In this scenario we distinguish the minimally required two to three phases of autonomous driving, leaving aside potential accidents and the dispute settlement following such incidents. In such a «happy flow scenario», the first phase is the admission test. In addition to the regular tests that all cars are subjected to, the software of the autonomous car will be subjected to millions of generated traffic conditions to see whether it shows the right behaviour and implements the rules properly. The second phase is the check before the car starts driving. Because the software will be regularly updated, each autonomous drive could start with a specific internal software admission test. This would include a check whether the software and hardware of the car are admitted. If so, the controller of the vehicle could release it for use on the road. The third phase is the actual driving of the vehicle, where the sensors of the vehicle allow the vehicle’s software to build an actual representation of the vehicle’s environment and, crucially, of its normative position towards all other traffic participants that are or may be impacted by the vehicle’s behaviour. In Fig. 1 this is indicated by the normative model that is being constantly updated, or rather instantiated, while the vehicle participates in traffic. The normative model is abstractly represented as a series of Hohfeldian cubes, as these are the basis for our modelling. This paper is too short to explain all details of this normative modelling, so we refer to Van Doesburg/Van Engers (2016) for more details on this modelling approach.

Fig. 1. Requirements check before driving and communication with other traffic participants during driving.
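The second phase, the check before the car starts driving, can be illustrated with a minimal sketch. It assumes that the admission authority publishes a registry of fingerprints (here SHA-256 digests) of admitted software and hardware versions. The registry and the names `AdmissionRegistry` and `release_for_driving` are hypothetical and are not taken from any existing regulation or system.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(blob: bytes) -> str:
    """Return a SHA-256 hex digest identifying one software or hardware image."""
    return hashlib.sha256(blob).hexdigest()


@dataclass
class AdmissionRegistry:
    """Hypothetical registry of fingerprints admitted by the road authority."""
    admitted: set = field(default_factory=set)

    def admit(self, blob: bytes) -> None:
        self.admitted.add(fingerprint(blob))

    def is_admitted(self, blob: bytes) -> bool:
        return fingerprint(blob) in self.admitted


def release_for_driving(registry: AdmissionRegistry,
                        software: bytes, hardware_id: bytes) -> bool:
    """Phase two: release the vehicle only if both components are admitted."""
    return registry.is_admitted(software) and registry.is_admitted(hardware_id)


registry = AdmissionRegistry()
registry.admit(b"driving-software v1.0")   # illustrative version strings
registry.admit(b"sensor-suite rev A")

ok = release_for_driving(registry, b"driving-software v1.0", b"sensor-suite rev A")
blocked = release_for_driving(registry, b"driving-software v1.1", b"sensor-suite rev A")
print(ok, blocked)
```

In this sketch an updated but not yet admitted software version blocks the release, which mirrors the idea that each drive starts with an internal admission test.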

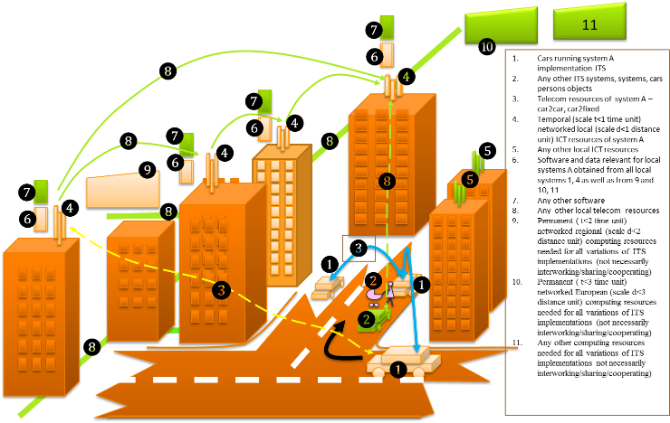

If we look at this future scenario of autonomous driving vehicles from a legal perspective, we can conceptualize the autonomous driving vehicles as «instrumental agents» controlled by an OEM. The entire traffic situation would thus consist of multiple OEMs whose presence in the situation is manifested through the vehicles that operate as «instrumental agents». Consequently, there will be liability relations between passengers and OEMs, among different OEMs, between OEMs and other traffic participants, and so on. Other parties, including the road authorities and road controllers, may also have responsibilities and consequent liabilities. In the intelligent transport systems (ITS, see Fig. 2) currently being developed for cooperative driving, for example, various parties play a role in communicating information essential for safe driving to the vehicles.
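To make the idea of normative positions between parties more concrete, the following minimal sketch encodes Hohfeld's four correlative pairs (claim and duty, privilege and no-right, power and liability, immunity and disability). It is not the Hohfeldian-cube formalism used for the normative model described here; the class `NormativeRelation` and the example parties are assumptions made purely for illustration.

```python
from dataclasses import dataclass

# Hohfeld's correlative pairs: a position held by one party
# always corresponds to a position of the counterparty.
CORRELATIVE = {
    "claim": "duty",
    "privilege": "no-right",
    "power": "liability",
    "immunity": "disability",
}
# Make the mapping symmetric so it can be looked up in both directions.
CORRELATIVE.update({v: k for k, v in list(CORRELATIVE.items())})


@dataclass(frozen=True)
class NormativeRelation:
    holder: str         # party holding the position
    position: str       # one of the eight Hohfeldian positions
    counterparty: str   # party on the other side of the relation
    about: str          # the act the relation concerns

    def correlative(self) -> "NormativeRelation":
        """Return the same relation seen from the counterparty's side."""
        return NormativeRelation(self.counterparty, CORRELATIVE[self.position],
                                 self.holder, self.about)


# Hypothetical example: a vehicle acting as instrumental agent of an OEM.
duty = NormativeRelation("vehicle of OEM A", "duty", "pedestrian",
                         "give way at the pedestrian crossing")
claim = duty.correlative()
print(claim)
```

Viewing each relation from both sides is what allows a liability question to be traced back to the party, for instance the OEM behind the vehicle, that held the corresponding duty.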

4.

Allowing for meaningful human control

An important challenge that holds for all autonomous systems is how we can allow for meaningful human control. In the context of autonomous driving vehicles this can be phrased as: «How to build ‹fully autonomous› vehicles that do not carry the risk of getting out of meaningful human control?4». Here, a basic set of rules is required to ensure that the software in the vehicle, which may be using various forms of artificial intelligence, including sub-symbolic ones, will not operate beyond human comprehension. Incidents should always be reproducible, so that they can be analysed in a transparent and accountable way and human judges can assess them against the human values and norms embodied in our legal systems. This means that two modelling actions should be performed:

- Modelling the traffic rules laid down in national legislation in software.

- Modelling transparency and accountability in or on top of the deep learning module of the vehicle.
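The two modelling actions above can be sketched together: a small explicit rule model that can override the proposal of a learning module, and a log that records which rule, if any, determined the final action. The rule labels, the 30 m threshold and the function `decide` are illustrative assumptions, not provisions of any actual traffic code.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Situation:
    light: str          # "red", "yellow" or "green"
    distance_m: float   # distance to the stop line in metres


@dataclass(frozen=True)
class Rule:
    rule_id: str                          # hypothetical label, not a legal citation
    applies: Callable[[Situation], bool]
    required_action: str


# Toy rule base; the 30 m threshold is an illustrative assumption.
RULES = [
    Rule("RED-LIGHT", lambda s: s.light == "red", "stop"),
    Rule("YELLOW-LIGHT", lambda s: s.light == "yellow" and s.distance_m > 30, "stop"),
]


def decide(situation: Situation, proposed: str, log: list) -> str:
    """Let the rule model override the learned proposal and record why."""
    rule_id: Optional[str] = None
    final = proposed
    for rule in RULES:
        if rule.applies(situation):
            rule_id, final = rule.rule_id, rule.required_action
            break
    # Every decision is logged, including those where no rule applied.
    log.append({"situation": situation, "proposed": proposed,
                "final": final, "rule": rule_id})
    return final


log = []
first = decide(Situation("red", 10.0), "proceed", log)
second = decide(Situation("green", 10.0), "proceed", log)
print(first, second, log[0]["rule"])
```

Because every decision is logged with the rule that triggered it, an incident can afterwards be traced to either the rule model or the learned proposal.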

5.

A formal model of traffic regulation

As early as the early 1990s, in the TRACS project supported by the Dutch Association for Scientific Research into Traffic Safety (SVOW), a formal model of Dutch traffic rules was created and translated into software5. The aim of the project was to construct an intelligent teaching system for traffic law. However, since the SVOW was also concerned with testing new versions of the traffic law, presented to it by the Ministry of Transport and Public Works, looking for anomalies in these new versions became the researchers’ core interest. Although the project ran long before artificial intelligence entered the vehicle itself, one of its conclusions could be very relevant to the implementation of traffic-rule-based software in the control module of the self-driving vehicle. When two paragraphs of the law were entered into the system, it emerged that, in a relatively simple test case, following the rules of the law led to a misinterpretation of the positions of two of the three road users in a given situation.

- Testing the rules (law) on suitability for modelling and for application, in this case traffic regulations on autonomous driving vehicles.

- Modelling the relevant rules into control software, in this case traffic regulations in control software of autonomous driving vehicles.

- Testing the control software, in this case the control software of autonomous vehicles, in a virtual environment that covers all possible situations.

- Monitoring the actual behaviour and intervening if necessary.
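The third step above, testing the control software in a virtual environment, can be sketched as follows. A small discretised grid of scenarios stands in for «all possible situations»; the toy rule model `required_action`, the deliberately flawed `controller` and the scenario values are assumptions made for the sake of the example.

```python
from itertools import product

# Hypothetical, heavily simplified scenario space.
LIGHTS = ("red", "yellow", "green")
DISTANCES_M = (5, 20, 50)
SPEEDS_KMH = (0, 30, 50)


def required_action(light, distance_m, speed_kmh):
    """Toy rule model: the action the rule demands, or None if it is silent."""
    if light == "red":
        return "stop"
    return None


def controller(light, distance_m, speed_kmh):
    """Toy control software under test, with a deliberate flaw near the stop line."""
    if light == "red" and distance_m > 10:
        return "stop"
    return "proceed"


def run_virtual_tests(policy):
    """Run the policy on every generated scenario and collect rule violations."""
    violations = []
    for scenario in product(LIGHTS, DISTANCES_M, SPEEDS_KMH):
        expected = required_action(*scenario)
        if expected is not None and policy(*scenario) != expected:
            violations.append(scenario)
    return violations


violations = run_virtual_tests(controller)
print(len(violations), "violations, first:", violations[0])
```

The same comparison between rule model and actual behaviour could in principle be reused for the fourth step, monitoring on the road, with recorded situations taking the place of generated ones.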

6.

Actors and responsibilities

In order to maintain those legal norms, the processes to which they relate should be sufficiently transparent. In autonomous functions this transparency is nowadays often lacking, although it is necessary for another legal requirement: accountability. The emission fraud was not noticed at the time the admission tests were performed, partly due to a lack of transparency regarding the software. Had the software been transparent, or even certified against the legal norms, the fraud would not have occurred or would at least have been noticed immediately. When it comes to software for autonomous vehicles, the same demand for transparency arises. Software is not an autonomous entity, even if it steers an autonomous vehicle. Ultimately, someone has to be responsible for the consequences, as the emission case showed. If not, OEMs and software developers will not have much incentive to comply with the regulations, and autonomous cars may become unpredictable black boxes.

7.

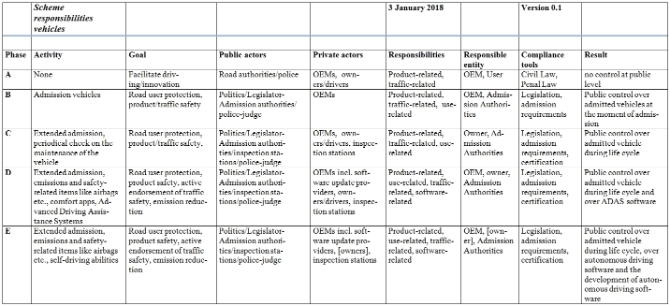

Vehicle admission process

8.

Conclusions

The first part should be the normative aspect based on the general traffic rules. All relevant traffic rules should be known to the vehicle software and should form the basis both of behaviour in traffic and of learning. This will not be easy, and it could become such a large task that OEMs will not undertake it individually but will prefer to implement this software as a commodity provided by certified IT companies.

But what if something goes wrong and the autonomous car ends up in an accident? What will be the position of the controller of the vehicle and of the victims? Will we, as a society, want to know what happened as precisely as possible, in order to address liability and to be able to learn from the mistakes that were made? We think and hope this will be the case. And that is where meaningful human control comes in. Software subject to this control will be able to reproduce the decision making in the last moments before the accident, because it will be transparent. The aim is that the information from the Event Data Recorder (EDR), which registers the last short period before the crash, will lead to the accountability of the controller as the legal entity responsible for the autonomous vehicle and its road behaviour.
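How an EDR could retain the decisions of the last short period before a crash, together with their reasons, can be sketched with a sliding time window. The record fields, the five-second window and the class `EventDataRecorder` are illustrative assumptions; real EDR requirements are set by regulation and are not reproduced here. The sketch also assumes that timestamps arrive in increasing order.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionRecord:
    t: float           # timestamp in seconds
    observation: str   # what the vehicle perceived
    action: str        # what it decided to do
    reason: str        # rule or model output that motivated the action


class EventDataRecorder:
    """Keeps only the decisions made during the last `window_s` seconds."""

    def __init__(self, window_s: float):
        self.window_s = window_s
        self._records = deque()

    def record(self, rec: DecisionRecord) -> None:
        self._records.append(rec)
        # Drop records that have fallen out of the retention window.
        while rec.t - self._records[0].t > self.window_s:
            self._records.popleft()

    def replay(self) -> list:
        """Return the retained decisions in chronological order."""
        return list(self._records)


edr = EventDataRecorder(window_s=5.0)
edr.record(DecisionRecord(0.0, "green light", "proceed", "no rule applies"))
edr.record(DecisionRecord(3.0, "yellow light", "brake", "YELLOW-LIGHT"))
edr.record(DecisionRecord(6.0, "red light", "stop", "RED-LIGHT"))
edr.record(DecisionRecord(7.0, "obstacle ahead", "emergency brake", "collision avoidance"))

for rec in edr.replay():
    print(rec.t, rec.action, rec.reason)
```

Storing the reason next to each action is what would allow the decision making before an accident to be reproduced and attributed afterwards.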

9.

References

J. Breuker/N. den Haan, Separating world and regulation knowledge: where is the logic, in: ICAIL ’91 Proceedings of the 3rd International Conference on Artificial Intelligence and Law, pp. 92–97, ACM, 1991.

United Nations Institute for Disarmament Research (UNIDIR), The Weaponization of Increasingly Autonomous Technologies: Considering how Meaningful Human Control might move the discussion forward, 2014, http://www.unidir.ch/files/publications/pdfs/considering-how-meaningful-human-control-might-move-the-discussion-forward-en-615.pdf.

R. van Doesburg/T.M. van Engers, A Formal Method for Interpretation of Sources of Norms, AI and Law Journal, 26-1, 2018 (to be published).

R. van Doesburg/T.M. van Engers, Perspectives on the Formal Representation of the Interpretation of Norms, in: Artificial Intelligence and Applications, 2016, pp. 183–186.

R. van Doesburg/T.M. van Engers, CALCULEMUS: Towards a Formal Language for the Interpretation of normative Systems, in: Artificial Intelligence for Justice Workshop, ECAI 2016.

- 1 SAE level 4 and 5.

- 2 Dei Sio 2016.

- 3 UNIDIR 2014, Horowitz/Scharre 2015.

- 4 Hearing on Self driving cars, March 2016.

- 5 Breuker/Den Haan, 1991.

- 6 Regulations 167/2013/EU and 168/2013/EU.