1.

Introduction ^

In the last twenty years, we have witnessed the birth and fast rapid development of a forensic science known as Digital Forensics, which aims to develop a rigorous methodology for the retrieval, collection and analysis of digital evidences.1 One of the greatest difficulties for experts in this field is to keep up with the speed of technical updates that, as we know, in the digital field is faster than in any other sector. In recent years, indeed, the retrieval and collection of digital data as judicial evidence has been the most challenging issue for Digital Forensics experts, especially due to the pervasive use of two technologies, namely Cloud Computing and AI. On the one hand, the virtualization of resources hinders the validation of the source, the accuracy of the analysis and the integrity of the results, since «evidence can reside everywhere in the world in a virtualization environment».2 On the other, the background of a decision taken by Artificial Intelligence systems lack in transparency, being their behaviour as unpredictable as «black box» outcomes.

Until now, such issues have been addressed improving pre-existing methodologies. «Cloud Forensics» can be defined as «the application of computer forensics principles and procedures in a cloud computing environment»,3 whereas «Explainable Artificial Intelligence» (XAI) aims to develop a suite of techniques that, bringing more transparency in the reasoning process, allow to validate the reconstruction of external events.4 Notwithstanding the promising results currently achieved in this way, it is a widespread opinion that «enabling technologies» require radically new approaches.

The same methodological discussion is taking place with Internet of Things (IoT)5. The term «Internet of Things», which was originally coined in 1999 with specific reference to RFID technologies6 and soon overcame more general expressions such as «ubiquitous computing»,7 «pervasive computing»8 and «ambient intelligence»,9 now is commonly used to designate «a global infrastructure for the information society, enabling advanced services by interconnecting (physical and virtual) things based on existing and evolving interoperable information and communication technologies» according to a definition given by ITU in 2012.10 The marvel that in the past could be just a vision, now has become everyday life. Today millions of different kinds of devices are connected in an extensive infrastructure, exchanging enormous quantities of data, and it is foreseen that in the future such phenomenon will increase exponentially.

Collecting and analysing digital evidences in IoT scenario poses several challenges, data being spread in an undetermined (e.g. in their type, number and location) set of connected devices.11 Indeed, machines could present security vulnerabilities, so being exposed to hijackers; data transmission could be unencrypted, allowing «third party manipulations», and storage units could not grant suitable access to archives. In an IoT environment any incident could cause exponential damages and traces of criminal activities could be very difficult to detect.

We claim that one of the key problems in IoT forensics is information quality. While, as a matter of fact, in the IoT environment the amount of sources of data and the trustworthiness of information obtained are inversely proportional, each step of all the processes concerning digital evidences has be validated in order to be discussed in courts among parties and judges. The fact is that, when it comes to jurisdiction, quantity cannot be opposed to quality, but they need to be harmonised.

In this paper we focus on three aspects of such issue: firstly, we provide a preliminary explanation on the concept of information quality, since «data» and «information» are quantitative concepts; secondly, we offer a short analysis of the practical concerns that can be considered as expression of the theoretical issues emerged in the previous part; after that, we express some methodological observations that can be useful to build a theoretical model that can be represented in a formula. At the end, we express our final evaluations and draw paths for future research.

2.

Theoretical background of «information quality» in IoT forensics ^

The distinction between «data quality» and «information quality» is very debated.12 The first concept – in accordance with ISO 8000-1 definition13 – is commonly stated as a measure of the fitness for the intended use of such data,14 while the second represents a broader range of features, also encompassing the context of the observation.15 In light of such discussion, we prefer to use the approach concerning «information quality» for three main reasons: (1) the extreme complexity of IoT environments, as described above, in which data are just a part of a complex environment, along with devices and processes; (2) the possibility to take into consideration both data and metadata; (3) the possibility to include our contribution in a wider theoretical perspective better known as «Philosophy of Information».16

According to such approach, «information» has three ontological statuses, identified since the seminal studies of Weaver:17 «information as reality», for example the electrical signal, which is transmitted regardless of the message contained; «information about reality», such as the information about natural phenomena, which can be true or false (hence, in philosophical terms, can be said «alethic»);18 «information for reality», which conveys instructions or algorithms to one or many recipients. Each of them has to be considered separately.

Consequently, «information quality» can be studied under three different views.

- Quality in «information as reality» is the most common and emerges, for example, in the traditional problem of reducing noise, distortion or losses in signal transmission theory. In this sense, it measures the affordability of the means implemented to transfer information.

- Quality in «information about reality» is concerned when information is dissimilar to the facts to which it refers. This concept is related to the meaning of information, or «semantics»19. Thus, here quality measures the reliability of the information provided in representing the related events.

- Quality in «information for reality» has to be faced when processes present inconsistencies, loopholes or conflicts. This concept involves further processes of information, for example when it is shared with others. Hence, in this respect it measures the trustworthiness of the agent who receives information or, generally, of those involved in further processes.

In digital forensics, the problem of «information quality» has received special consideration under different perspectives. Here we can deploy the same tripartite analytical model to draw a comprehensive framework.

- The first kind of quality is important, in order to preserve authenticity of the collected information, experts developed the concept of «chain of custody» and achieved a broad consensus on «best practices» which have been codified in technological standards.20

- The second type of quality is concerned when the trustworthiness in the representation of the events has to be verified with other sources of evidences.21

- The third sort of quality is involved in the discussion on the evidences among parties (inquiring authorities, defendants, judges, forensics experts). Here, external variables also can make the difference, such as personal competences of the agents involved, «soft skills» (argumentation skills, trial strategies), cost of analytical tools, available time.

From a theoretical perspective, since in IoT devices are constantly sharing information with each other, «quality of information» is a much complex issue. Indeed, it has to be considered not as a property of a single device, but as a feature of the whole ecosystem in which it is immersed. Specific concerns emerge in each considered aspect.

- «Quality of information as reality»: it is difficult to isolate a single device or crystalize a specific piece of information. The boundaries of relevance are blurred. This aspect is, not without a reason, widely discussed by experts.22

- «Quality of information about reality»: it is problematic to detect a specific source, to trace the chain of interactions, to measure the influence of a single item in shaping the representation of the event occurred. It is commonly true that «correlation is not causation», however IoT is, above all, a matter of correlation. Here is IoT is where «quality of information» really faces uncertainty.23

- «Quality of information for reality»: this is the most difficult aspect of IoT forensics. Under this perspective, the «human factor» plays its part besides the technological variables, as shown in digital forensics.

The observation above outlined can be represented in the following table:

| Ontological statuses of Information | «Quality of Information» level of analysis | «Quality of Information» in digital forensics | «Quality of Information» in IoT forensics |

| Information as reality | Traditional theory of communication | «chain of custody» | Relevance |

| Information about reality | Consistence with represented facts | External validation with other sources of evidence | Uncertainty |

| Information for reality | Logical coherence | Effectiveness | «Human factor» |

Table 1: «Quality of information» tripartite analysis and IoT issues

3.

Practical issues in IoT forensics ^

After having provided a short definition of «quality of information», we intend to underline that the main feature of an IoT network, while performing the given tasks, is the possibility, when necessary, to take advantage exchanging information with other neighbouring elements, regardless the fact that they could belong to different IoT ecosystem, possibly set up for a different purpose. Over the years it became clear that, while it was originally conceived as a sunrise of new opportunities for increasing the efficiency of services – thus to benefit private users and industries - this technology generates a «virtual environment» which contains a huge amount of information which, if needed, can be used as a source of evidence in a forensic scenario. In the following part, we observe that the main task of IoT Forensics, namely the collection of the evidence, is challenging for different causes.

First, the extreme variety of IoT items, with proprietary or undocumented protocols, often without physical interfaces, hinders the direct extraction of evidence from devices.

Second, IoT devices ceaselessly send information to «their own» cloud service providers. So, obtaining evidences from cloud service implicates other challenges: technical ones (cloud forensics) and legal ones (interact with foreign companies in different legal frameworks).

Third, evidences could be manipulated by cloud service providers. Indeed, raw data fed by IoT devices are analysed, parsed and stored in databases and server, yet adding more layers of interpretations and classifications. Those processes can aggregate data, but also deteriorate them, thus weaken the quality of the acquired information.

In order to explain such issues, we provide an example: let us assume that we need to acquire the complete geolocalization history of a specific account from a cloud service provider (i.e. Google Timeline History24).

Let’s suppose that we have the account’s credentials (i.e. username and password): we could use a forensic software (i.e. Oxygen Forensic® Cloud Extractor) to acquire the geolocalization history25. After the acquisition we could explore and analyse the collected data: we would likely find a very clear and detailed set of information, spanning years and neatly organized. The typical forensic software user interface for this type of data consist of a world map we can freely zoom and search, a time filter and coloured pins and lines that represents geolocalization info and probable movements.

This great source of info can lead us to assume that the quality of those data is very high, but after an additional analysis we should find out that actually we don’t know where information come from. We know that these cloud service provider acquire geolocalization data from a great range of source related to that specific account: smartphone’s GPS; EXIF data of images; cellular network radio tower; WiFi known geolocalization; NFC; fitness apps; Uber rides; IP geolocalization; fitbands; smartwatches; navigation apps; reviews of shops or restaurants; public transportation tickets; and many other IoT sources. The timeline can even be manipulated by the user through the cloud service web interface (i.e. in Google Timeline we can easily add a new geolocalization or change or deleted an old record).

If we focus to a specific day we only have the alleged geolocalization history: some points (consisting in longitude, latitude and timestamp) and lines that connect those points to speculate movements, but we are not able to discover where each point comes from. Even if in some metatag there is an information about the source of the info, we can access only the parsed data: we don’t know if the source is reliable or if the data has been manipulated, compromised or not properly interpreted. The original data can’t even be accessible (i.e. If the geolocalization comes from a fitband’s GPS, probably we can’t physically connect to the device; we can acquire the paired smartphone and analyse the Fitband’s app’s databases, but even that isn’t the original data…).

4.

Theoretical model ^

The evaluation of the «Information Quality» in a set of data is not generally a trivial task, as emerged in the previous sections. The various steps of the assessment always hang on different factors, many of whom sometimes cannot be precisely verified, but only estimated, without forgetting the «noise» coming with the data flow, which must be carefully identified and removed. In addition to these basic difficulties, in common for every environment, the quality of information needed in a forensics scenario must undergone to a further filter, represented by the «check for the admissibility», which allows the acquired data to be a part of a trial procedure.

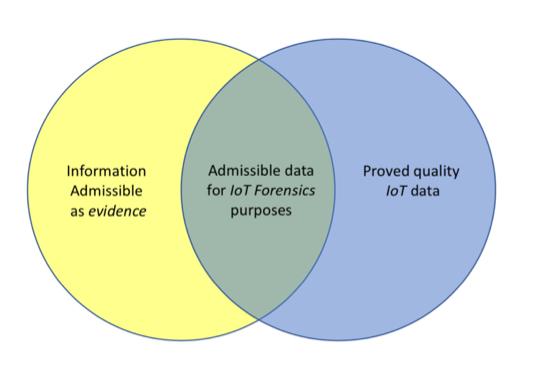

Fig.1 The first approach in the Information Quality assessment for Forensics IoT purposes.26

A first approach for this issue could be based upon the verification of «quality of information» in two different fields, as exposed in Figure 1. Information which will be proved to be, at the same time, admissible as an evidence in a trial (as it has been properly acquired and stored) and reliable as a collection of data, will serve as an input for the forensics analysis. In our opinion, this method is affected by two main risks: wasting time with one of the two controls when the outcome of the other is negative, and rejecting key evidences which, for some reason, are not proven to be reliable as high quality IoT data (e.g. a noisy image which, considered together with other evidences, could have an important meaning). For the above explained reasons, we prefer to adopt another approach, where the two aspects of the extracted information (as rough data, and as forensic evidence) are spread all over the addends of a «sum of proper features».

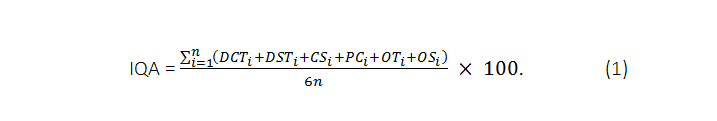

In order to support such method, and to express the result of trustworthiness of a set of n IoT devices that have to be acquired as digital evidences, we formalize how the above mentioned factors must be taken into consideration, introducing a percentage coefficient that we named IQA (Information Quality Assessment) as follows:

Where:

i = i-th device

DTC = device technical status

DST = device security status (Confidentiality, Integrity, Availability, …)

CS = cloud service security status

PC = privacy (GDPR) compliance

OT = observer technological advancement

OS = observer skills

(allowed values: all decimal between 0 = bad and 1 = good)

Giving an interpretation of (1) it’s straightforward, if we observe that a device scoring «1» to every component of the sum implies a 100% of Information Quality. This percentage must be averaged with the one scored by other components of the set, if any.

We underline that, also because IoT Forensics is one of the newer subareas of Digital Forensics,27 very few studies have been carried out with the specific aim of modelling a theoretical approach28 and, of course, a lot of work has still to be done to refine and improve the above formula. Possible criticisms of this approach may be:

1) It may not be possible to define each item for all types of IoT devices. E.g. the term C.S implies that the device is always connected to its environment, but it can be used also «offline»;

2) The information needed to define these terms may not be accessible. This would be possible not only technically, but because of restrictions imposed by the companies that own them. For example, let’s think about an investigation in Italy that requires data related to a journey of a Chinese car, stored in a server in that country.

5.

Conclusions ^

As «information» has many meanings, «quality of information» has many facets. Furthermore, in the process of building knowledge, complexity and uncertainty must always be taken into account. Technological innovation, such as human nature, are inevitable factors with unpredictable effects.

It has to be remarked that «quality of information» is crucial in Digital Forensics. Civil and criminal proceedings can be diverted from their fair conclusions by mistakes or misinterpretations of several actors: judges, defendants, prosecutors, police officers, consultants, witnesses. In this, digital evidence becomes more and more important not only because it is increasingly widespread, but also because of the ever-increasing skills required to face it.

Of the many challenges currently faced by Digital Forensics, IoT technologies bring the more concerning not only for practical causes, but also for theoretical reasons.

From a theoretical perspective, many questions need to be deepened. Aside the discussion between «data quality» and «information quality» approaches, it should be considered how «quality of information» evolves in different levels of «complexity» (human to human, human to machine, machine to machine). From this perspective, «information quality» could be considered an ethical value to pursue in the design of IoT devices and ecosystems.

From a practical perspective, we need to understand which type of data we can collect and how it’s been manipulated. From this perspective, «information quality» could be considered a kind of metadata: information about information. In a nutshell, in order to analyse IoT evidences, it is required not only information itself, but also the «information history», from the original source to the repository that we are collecting.

Based upon the above considerations, we analysed two model to address the issue of Information Quality Assessment in IoT Forensics. The first takes separately into account the two faces of the extracted information, namely as a rough set of data that has to be proved as thrustable, and as a possible evidence which has to be admitted in a court. We then exposed some reasons why this model had to be discarded, and proposed a second one, this time with a proper formula, where every component represent a different aspect of the IoT data which could have to be analysed. Its novelty certainly will lead to the possibility of suggestions for (even radical) corrections and improvements.

In the future we intend to deepen the implementation of such model of assessment, possibly with on-the-field testing. As an example, for every IoT must be possible defining the first four terms of the formula, since the last two only depends upon the observer.

6.

References ^

Aarts, Emile H. L./ Encarnação, José Luis (Eds.), True visions: the emergence of ambient intelligence, Springer, Berlin, 2006.

Adadi, Amina/ Berrada, Mohammed, Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI), IEEE Access, volume 6, 2018, p. 52138-52160.

Ashton, Kevin, That «Internet of Things» Thing, RFID Journal, 2009. https://www.rfidjournal.com/articles/view?4986 (accessed 7 January 2019).

Babar, Sachin/Mahalle, Parikshit/Stango, Antonietta/Prasad, Neeli/Prasad, Ramjee, Proposed Security Model and Threat Taxonomy for the Internet of Things (IoT). In: Meghanathan Natarajan, Boumerdassi Selma, Chaki Nabendu, Nagamalai Dhinaharan (Eds.), Recent Trends in Network Security and Applications Springer, Berlin, Heidelberg, 2010, p. 420-429.

Bar-Hillel, Yehoshua/ Carnap, Rudolf, Semantic Information, The British Journal for the Philosophy of Science, volume 4, issue 14, 1953, p. 147-157.

Batini, Carlo/Cappiello, Cinzia/Francalanci, Chiara/Maurino, Andrea, Methodologies for data quality assessment and improvement, ACM Computing Surveys, volume 41, issue 3, 2009, p. 1-52.

Beni, Majid Davoody, Syntactical Informational Structural Realism, Minds and Machines, volume 28, issue 4, 2018, p. 623-643.

Borgmann, Albert, Holding on to reality. The nature of information at the turn of the millennium, University of Chicago Press, Chicago, 1999.

Chen, Dong/Chang, Guiran/Sun, Dawei/Li, Jiajia/Jia, Jie/Wang, Xingwei, TRM-IoT: A trust management model based on fuzzy reputation for internet of things, Computer Science and Information Systems, volume 8, issue 4, 2011, p. 1207-1228.

Conti, Mauro/Dehghantanha, Ali/Franke, Katrin/Watson, Steve, Internet of Things security and forensics: Challenges and opportunities, Future Generation Computer Systems, volume 78, 2018, p. 544-546.

DeLone, William H./ McLean, Ephraim R., Information Systems Success: The Quest for the Dependent Variable, Information Systems Research, volume 3, issue 1, 1992, p. 60-95.

DeLone, William H./ McLean, Ephraim R., The DeLone and McLean Model of Information Systems Success: A Ten-Year Update, Journal of Management Information Systems, volume 19, issue 4, 2003, p. 9-30.

Dretske, Fred I., Knowledge & the flow of information, MIT Press, Cambridge (Mass.), 1981.

Floridi, Luciano (Ed.), The Onlife Manifesto. Being Human in a Hyperconnected Era, Open Access Springer International Publishing, Cham 2015.

Floridi, Luciano, The Philosophy of Information, Oxford University Press, Oxford, 2013.

Floridi, Luciano/ Illari, Phyllis, The philosophy of Information quality, Synthese library, 358, Springer, Berlin, 2014.

Friedewald, Michael/ Raabe, Oliver, Ubiquitous computing: An overview of technology impacts, Telematics and Informatics, volume 28, issue 2, 2011, p. 55-65.

Giacobbe, Maurizio/Di Pietro, Riccardo/Longo Minnolo, Antonino/Puliafito, Antonio, Evaluating Information Quality in Delivering IoT-as-a-Service, 2018 IEEE International Conference on Smart Computing (SMARTCOMP), 2018, p. 405-410.

Hansmann, Uwe, Pervasive computing handbook, Springer, Berlin-New York, 2001.

Hegarty, Robert/Lamb, David J./Attwood, Andrew, Digital Evidence Challenges in the Internet of Things, Proceedings of the Tenth International Network Conference (INC) 2014 School of Computing & Mathematics Plymouth University, Plymouth, 2014, p. 163-172.

Höller, Jan/Tsiatsis, Vlasios/Mulligan, Catherine/Karnouskos, Stamatis/Avesand, Stefan/Boyle, David, From machine-to-machine to the internet of things: introduction to a new age of intelligence, Academic Press, Amsterdam, 2014.

Hossain, M./Karim, Y./Hasan, R., FIF-IoT: A Forensic Investigation Framework for IoT Using a Public Digital Ledger, 2018 IEEE International Congress on Internet of Things (ICIOT) IEEE, 2018, p. 33-40.

ITU, Recommendation ITU-T Y.2060 Overview of the Internet of things, 2012.

Lundgren, Björn, Does semantic information need to be truthful?, Synthese, 2017.

Magruk, Andrzej, The Most Important Aspects of Uncertainty in the Internet of Things Field – Context of Smart Buildings, Procedia Engineering, volume 122, 2015, p. 220-227.

Meffert, Christopher/Clark, Devon/Baggili, Ibrahim/Breitinger, Frank, Forensic State Acquisition from Internet of Things (FSAIoT): A general framework and practical approach for IoT forensics through IoT device state acquisition, Proceedings of the 12th International Conference on Availability, Reliability and Security ACM, Reggio Calabria, Italy, 2017, p. 1-11.

Micic, Natasha/Neagu, Daniel/Campean, Felician/Zadeh, Esmaeil Habib, Towards a Data Quality Framework for Heterogeneous Data, 2017 IEEE International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), 2017, p. 155-162.

Palmer, Gary, A Road Map for Digital Forensic Research. Report From the First Digital Forensic Research Workshop (DFRWS), New York, 2001.

Povar, Digambar/ Geethakumari, G., A Heuristic Model for Performing Digital Forensics in Cloud Computing Environment. In: Mauri JaimeLloret, Thampi SabuM, Rawat DandaB, Jin Di (Eds.), Security in Computing and Communications, Communications in Computer and Information Science Springer Berlin Heidelberg, 2014, p. 341-352.

Shamala, Palaniappan/Ahmad, Rabiah/Zolait, Ali/Sedek, Muliati, Integrating information quality dimensions into information security risk management (ISRM), Journal of Information Security and Applications, volume 36, 2017, p. 1-10.

Simou, Stavros/Kalloniatis, Christos/Kavakli, Evangelia/Gritzalis, Stefanos, Cloud Forensics: Identifying the Major Issues and Challenges. In: Jarke Matthias, Mylopoulos John, Quix Christoph, Rolland Colette, Manolopoulos Yannis, Mouratidis Haralambos, Horkoff Jennifer (Eds.), Advanced Information Systems Engineering, Lecture Notes in Computer Science Springer International Publishing, 2014, p. 271-284.

Weaver, Warren, The Matemathics of Communication, Scientific American, volume 181, issue 1, 1949, p. 11-15.

Weiser, Mark, The computer for the 21st century, SIGMOBILE Mob. Comput. Commun. Rev., volume 3, issue 3, 1999, p. 3-11.

Wills, Gary B./Alenezi, Ahmed/Zulkipli, Nik/Huda, Nurul, IoT Forensic: Bridging the Challenges in Digital Forensic and the Internet of Things, Proceedings of the 2nd International Conference on Internet of Things, Big Data and Security Scitepress, 2017, p. 315-324.

Woodall, Philip/Borek, Alexander/Parlikad, Ajith Kumar, Data quality assessment: The Hybrid Approach, Information & Management, volume 50, issue 7, 2013, p. 369-382.

Yaqoob, Ibrar/Hashem, Ibrahim Abaker Targio/Ahmed, Arif/Kazmi, S. M. Ahsan/Hong, Choong Seon, Internet of things forensics: Recent advances, taxonomy, requirements, and open challenges, Future Generation Computer Systems, volume 92, 2019, p. 265-275.

Zareen, Muhammad Sharjeel/Waqar, Adeela/Aslam, Baber, Digital Forensics: Latest Challenges and Response, 2013 2nd National Conference on Information Assurance (NCIA) IEEE, Piscataway, NJ, 2013, p. 21-29.

Zawoad, S./ Hasan, R., FAIoT: Towards Building a Forensics Aware Eco System for the Internet of Things, 2015 IEEE International Conference on Services Computing IEEE, 2015, p. 279-284.

- 1 Digital Forensics has been defined «the use of scientifically derived and proven methods toward the preservation, collection, validation, identification, analysis, interpretation, documentation, and presentation of digital evidence derived from digital sources for the purpose of facilitation or furthering the reconstruction of events found to be criminal, or helping to anticipate unauthorized actions shown to be disruptive to planned operations» Palmer, A Road Map for Digital Forensic Research. Report From the First Digital Forensic Research Workshop (DFRWS), New York, 2001, (p. 23).

- 2 Simou/Kalloniatis/Kavakli/Gritzalis, Cloud Forensics: Identifying the Major Issues and Challenges. In: Jarke, M./Mylopoulos, J./Quix, C./Rolland, C./Manolopoulos, Y./Mouratidis, H. and Horkoff, J. (Eds.), Advanced Information Systems Engineering, Lecture Notes in Computer Science Springer International Publishing, 2014, p. 271-284 (p. 273).

- 3 Povar/Geethakumari, A Heuristic Model for Performing Digital Forensics in Cloud Computing Environment. In: Mauri, J./Thampi, S./Rawat, D. and Jin, D. (Eds.), Security in Computing and Communications, Communications in Computer and Information Science Springer Berlin Heidelberg, 2014, p. 341-352 (p. 344).

- 4 Adadi/Berrada, Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI), IEEE Access, volume 6, 2018, p. 52138-52160.

- 5 Hegarty/Lamb/Attwood, Digital Evidence Challenges in the Internet of Things. Proceedings of the Tenth International Network Conference (INC) 2014 School of Computing & Mathematics Plymouth University, Plymouth, 2014, p. 163-172, Hossain/Karim/Hasan, FIF-IoT: A Forensic Investigation Framework for IoT Using a Public Digital Ledger. 2018 IEEE International Congress on Internet of Things (ICIOT) IEEE, 2018, p. 33-40, Zawoad/Hasan, FAIoT: Towards Building a Forensics Aware Eco System for the Internet of Things. 2015 IEEE International Conference on Services Computing IEEE, 2015, p. 279-284, Meffert/Clark/Baggili/Breitinger, Forensic State Acquisition from Internet of Things (FSAIoT): A general framework and practical approach for IoT forensics through IoT device state acquisition. Proceedings of the 12th International Conference on Availability, Reliability and Security ACM, Reggio Calabria, Italy, 2017, p. 1-11.

- 6 Ashton, That «Internet of Things» Thing, RFID Journal, 2009.

- 7 Weiser, The computer for the 21st century, SIGMOBILE Mob. Comput. Commun. Rev., volume 3, issue 3, 1999, p. 3-11.

- 8 Hansmann, Pervasive computing handbook, Springer, Berlin; New York, 2001.

- 9 Aarts/Encarnação (Eds.), True visions: the emergence of ambient intelligence Springer, Berlin, 2006.

- 10 ITU, Recommendation ITU-T Y.2060 Overview of the Internet of things, 2012, Friedewald/Raabe, Ubiquitous computing: An overview of technology impacts, Telematics and Informatics, volume 28, issue 2, 2011, p. 55-65.

- 11 Zareen/Waqar/Aslam, Digital Forensics: Latest Challenges and Response. 2013 2nd National Conference on Information Assurance (NCIA) IEEE, Piscataway, NJ, 2013, p. 21-29.

- 12 For the sake of brevity, here we cannot deepen the concept of «quality». However, it can be said that such term is used in different disciplines (from business to philosophy) to compare a given object, often an empirical one, with a set of requirements. The term of comparison can be a technical standard, such as an ISO certification, or theoretical model, such as a set of abstract or «ethical» values.

- 13 https://www.iso.org/standard/50798.html.

- 14 DeLone/McLean, Information Systems Success: The Quest for the Dependent Variable, Information Systems Research, volume 3, issue 1, 1992, p. 60-95, DeLone/McLean, The DeLone and McLean Model of Information Systems Success: A Ten-Year Update, Journal of Management Information Systems, volume 19, issue 4, 2003, p. 9-30, Batini/Cappiello/Francalanci/Maurino, Methodologies for data quality assessment and improvement, ACM Computing Surveys, volume 41, issue 3, 2009, p. 1-52, Woodall/Borek/Parlikad, Data quality assessment: The Hybrid Approach, Information & Management, volume 50, issue 7, 2013, p. 369-382.

- 15 Shamala/Ahmad/Zolait/Sedek, Integrating information quality dimensions into information security risk management (ISRM), Journal of Information Security and Applications, volume 36, 2017, p. 1-10, Giacobbe/Di Pietro/Longo Minnolo/Puliafito, Evaluating Information Quality in Delivering IoT-as-a-Service. 2018 IEEE International Conference on Smart Computing (SMARTCOMP), 2018, p. 405-410.

- 16 Floridi (Ed.), The Onlife Manifesto. Being Human in a Hyperconnected Era, Open Access Springer International Publishing, Cham, 2015, Floridi, The Philosophy of Information, Oxford University Press, Oxford, 2013.

- 17 In the original exposition of Theory of Communication such concepts were expressed as different «levels», respectively as «technical», «semantic» and «influential» Weaver, The Matemathics of Communication, Scientific American, volume 181, issue 1, 1949, p. 11-15, (p. 11). Instead, cybernetics defined «technological», «natural» and «cultural» information. Borgmann, Holding on to reality. The nature of information at the turn of the millennium, University of Chicago Press, Chicago, 1999.

- 18 Lundgren, Does semantic information need to be truthful?, Synthese, 2017, Dretske, Knowledge & the flow of information, MIT Press, Cambridge, Mass., 1981.

- 19 Beni, Syntactical Informational Structural Realism, Minds and Machines, volume 28, issue 4, 2018, p. 623-643, Bar-Hillel/Carnap, Semantic Information, The British Journal for the Philosophy of Science, volume 4, issue 14, 1953, p. 147-157.

- 20 International Standards published by the International Organization for Standardization (ISO), i.e. ISO/IEC 27037:2012 — Information technology — Security techniques — Guidelines for identification, collection, acquisition, and preservation of digital evidence; ISO/IEC 27041:2015 — Information technology — Security techniques — Guidance on assuring suitability and adequacy of incident investigative methods; ISO/IEC 27042:2015 — Information technology — Security techniques — Guidelines for the analysis and interpretation of digital evidence; ISO/IEC 27050 — Information technology — Security techniques — Electronic discovery — Part 1 (2016): Overview and concepts and Part 3 (2017): Code of practice for electronic discovery.

- 21 Floridi/Illari, The philosophy of Information quality, Synthese library, 358, Springer, Berlin-Heidelberg, 2014.

- 22 Wills/Alenezi/Zulkipli/Huda, IoT Forensic: Bridging the Challenges in Digital Forensic and the Internet of Things. Proceedings of the 2nd International Conference on Internet of Things, Big Data and Security Scitepress, 2017, p. 315-324, Conti/Dehghantanha/Franke/Watson, Internet of Things security and forensics: Challenges and opportunities, Future Generation Computer Systems, volume 78, 2018, p. 544-546.

- 23 Few authors have talked suck issue, see Magruk, The Most Important Aspects of Uncertainty in the Internet of Things Field – Context of Smart Buildings, Procedia Engineering, volume 122, 2015, p. 220-227.

- 24 https://www.google.com/maps/timeline.

- 25 We presume that the forensic software is compliant with all Digital Forensics procedures and standard.

- 26 It has to be taken into account, in two separate evaluation pipelines, both the quality of the data (i.e. low noise, reliable source, etc) and its admissibility as evidence in court (i.e. acquired according to the Best Practices). Data which pass through this double filter are suitable to be used in the forensics analysis. Although feasible in theory, its practical application can leads to some undesirable drawbacks: unnecessary loss of time and discarding important evidences.

- 27 Yaqoob/Hashem/Ahmed/Kazmi/Hong, Internet of things forensics: Recent advances, taxonomy, requirements, and open challenges, Future Generation Computer Systems, volume 92, 2019, p. 265-275, Höller/Tsiatsis/Mulligan/Karnouskos/Avesand/Boyle, From machine-to-machine to the internet of things: introduction to a new age of intelligence, Academic Press, Amsterdam, 2014.

- 28 Micic/Neagu/Campean/Zadeh, Towards a Data Quality Framework for Heterogeneous Data. 2017 IEEE International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), 2017, p. 155-162, Chen/Chang/Sun/Li/Jia/Wang, TRM-IoT: A trust management model based on fuzzy reputation for internet of things, Computer Science and Information Systems, volume 8, issue 4, 2011, p. 1207-1228, Babar/Mahalle/Stango/Prasad/Prasad, Proposed Security Model and Threat Taxonomy for the Internet of Things (IoT). In: Meghanathan, N./Boumerdassi, S./Chaki, N. and Nagamalai, D. (Eds.), Recent Trends in Network Security and Applications Springer, Berlin, Heidelberg, 2010, p. 420-429.