1.

Introduction

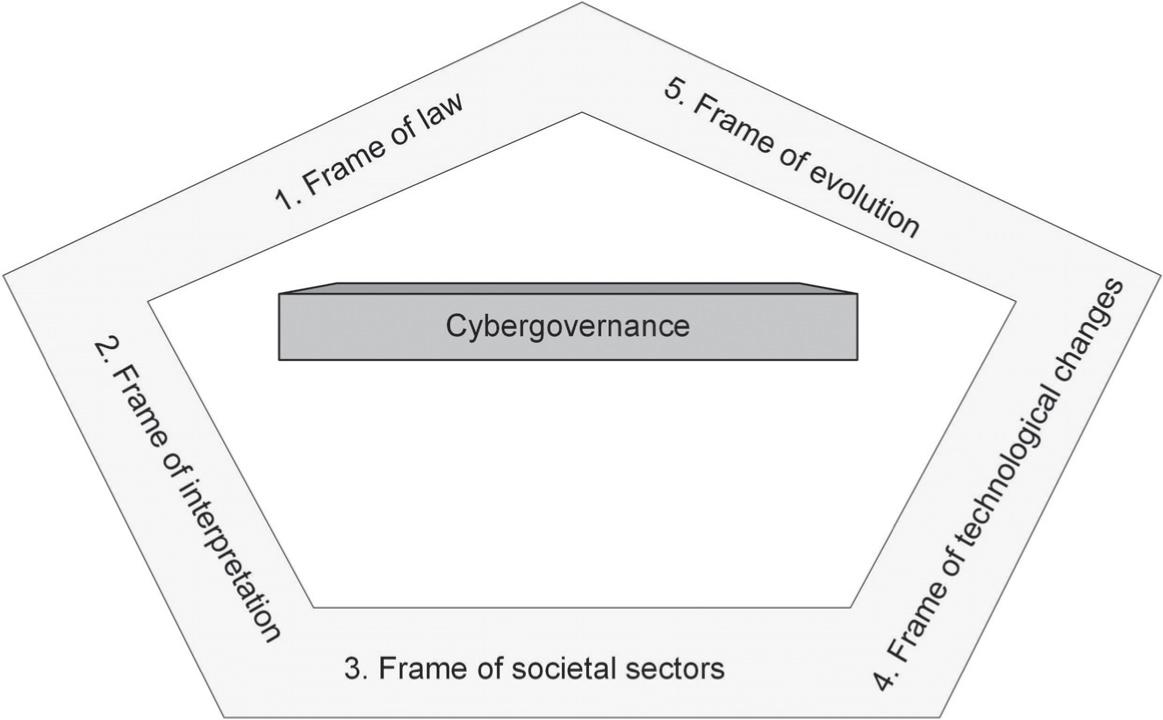

Five societal frames of cybergovernance are distinguished in this article: (1) law; (2) legal interpretation; (3) societal sectors; (4) technological changes; and (5) evolution (Figure 1). Each of these is described below. In cognitive linguistics and empirical semantics, a ‘frame’ means “any system of concepts related in such a way that to understand one of them you have to understand the whole structure in which it fits” [Fillmore 2006 (1982), 373].

Figure 1: Five societal frames of cybergovernance

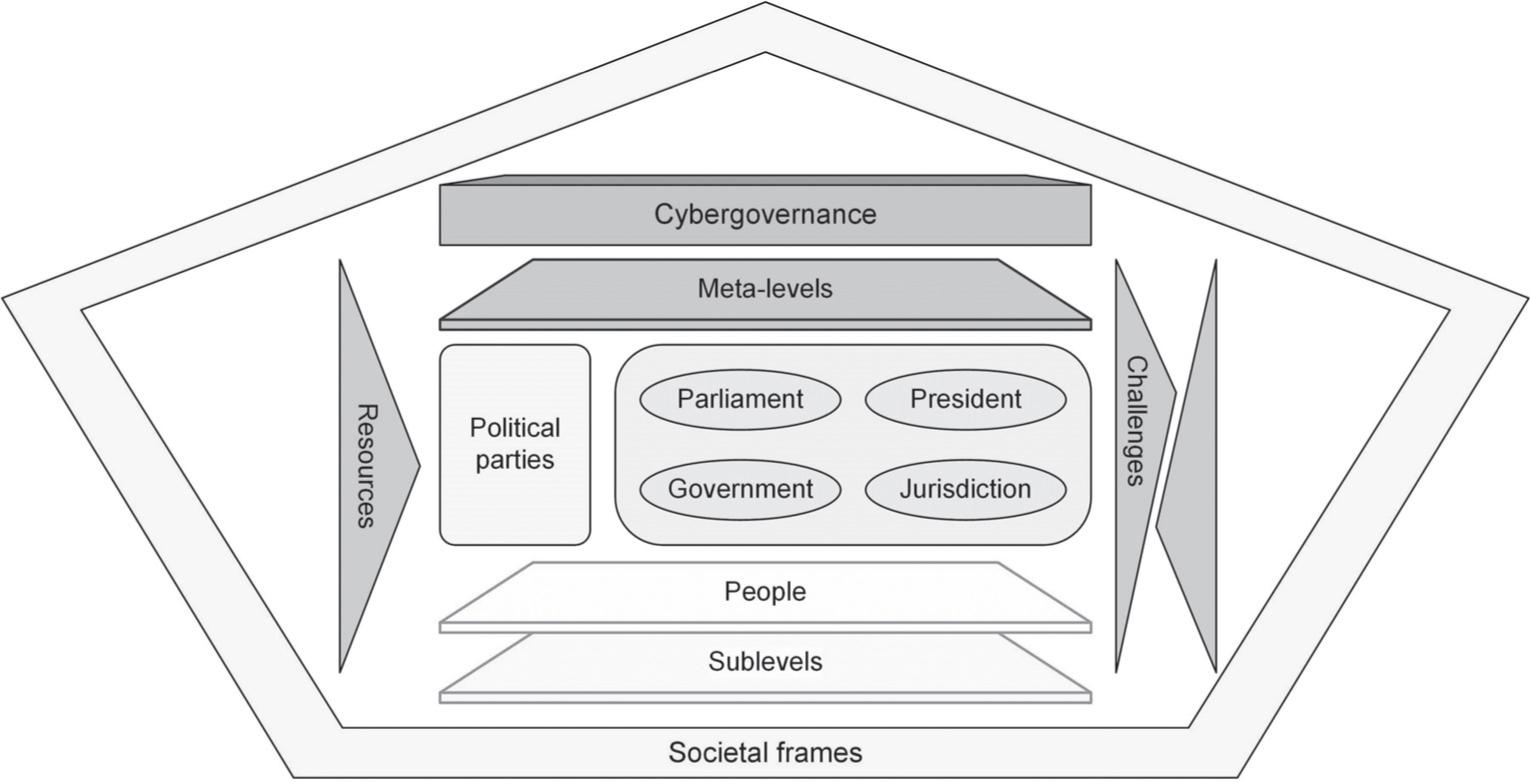

Cybergovernance and other internet-related phenomena have emerged within a particular landscape comprising several societal elements or layers. The first is the nation state, with its classical powers: the parliament, the government, the judiciary and the president. The next layer contains the political parties, and the layer after that the people. In addition, there are meta-levels involving politics, doctrines, principles, etc., and sublevels such as criminality. A further element relates to resources. Challenges such as rivalry, wars and other conflicts must also be taken into account. Cybergovernance is the final element to consider. A schema of these elements is shown in Figure 2. The five societal frames are explored against the background of this landscape.

Figure 2: The landscape in which cybergovernance has emerged

2.1.

Frame of law

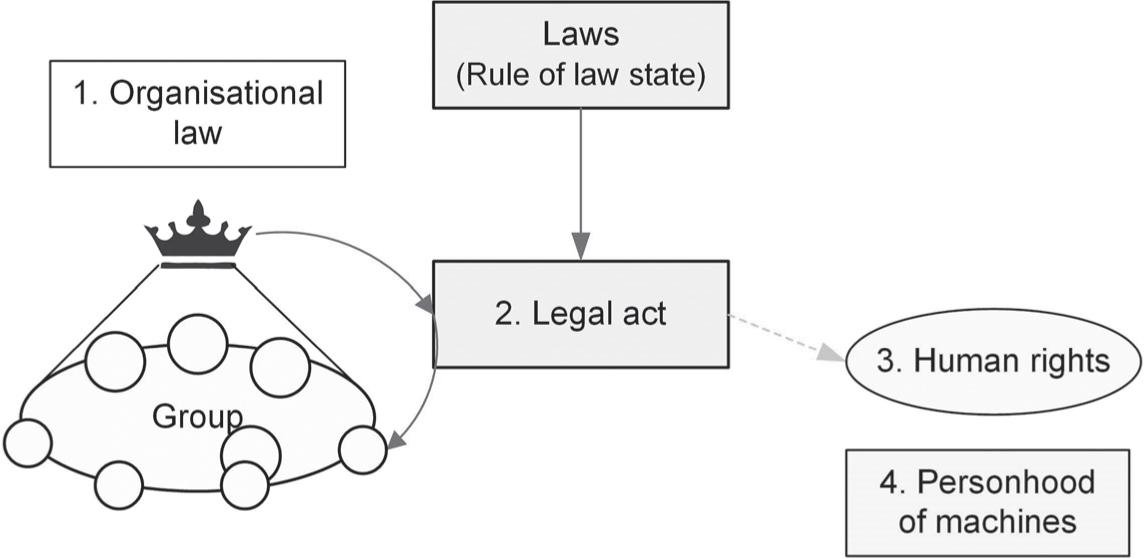

The first frame, the frame of law, concerns the evolution of the law and consists of four elements (Figure 3):

1. Organisational law, for example the way in which the “king” is determined.

2. Legal acts under the rule of law and criteria for applying the law.

3. Human rights.

4. Laws on machines and the personhood of machines.

Figure 3: The frame of law

Organisational law is the first element in this frame. A group of human beings (e.g. a tribe, a population, a nation or a company) selects a representative for the group, referred to here as the “king”. Organisational law regulates how the king is selected. The concept of a legal act forms the second element in this frame. The king and his officials issue legal acts, and since the 16th century there have been laws that determine these legal acts. Plato called such laws nomói. Since the 1850s, we have had the concept of a state that is subject to the rule of law. The third element in the frame of law is human rights. Outdated conceptions of the law assume that laws apply only to citizens, whereas human rights apply to all humans; the concept of human rights has been accepted since the mid-20th century (UN Universal Declaration of Human Rights, UDHR, 1948; European Convention on Human Rights, ECHR, 1950). The fourth element concerns the legal subjectivity of machines. The subjects of the law are humans, not machines. Legal subjects include both natural persons and legal persons; machines are treated as objects, which are not capable of bearing rights and duties. Liability is imposed on the owner of a machine (owner’s liability) or on its manufacturer (product liability). However, in recent decades there have been discussions of the legal personality of synthetic persons, such as avatars in three-dimensional online virtual worlds and robots in the real world (see e.g. Schweighofer 2007; Bryson et al. 2017; Solaiman 2017). In summary, the first frame shows the dynamics of the law.

2.2.

Frame of legal interpretation

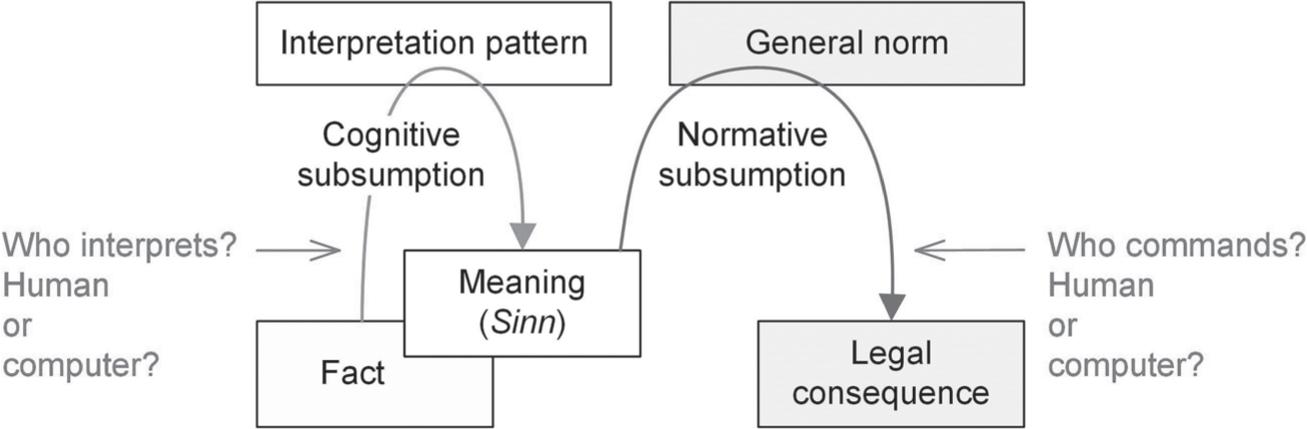

The second frame, that of legal interpretation, is based on legal subsumption. Subsumption refers to the application of the law, or more precisely the application of a norm to a fact, which gives rise to a legal qualification. Subsumption concerns the relation between a fact and a normative condition, and can be divided into two steps: cognitive (also known as factual or terminological) subsumption and normative subsumption (Figure 4).

Figure 4: The frame of interpretation, which focuses on the subsumption of a fact under a norm. A legal consequence arises in the form of a decision

In cognitive subsumption, a legal fact is related to its legal meaning; in other words, the facts of a case are transformed into legal terms. Suppose, for example, that an action a is qualified as a theft A rather than as a burglary. The second step is normative subsumption, in which the general norm is applied and a legal consequence arises in the form of a decision. Subsumption can thus be related to inference by modus ponens: given that a is qualified as A, and given a norm stating that A entails a certain consequence, that consequence follows for a. Legal qualification, which results from the subsumption procedure, is central to ontologies in law. For example, whether a killing (a fact of world knowledge) counts as murder, as the execution of a legal sanction, or as a permitted act within an international armed conflict depends solely on the legal qualification of the act. Hence, subsumption is of key importance when judging a violation. Note that the same factual situation can be subsumed under different general norms.
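The two-step structure of subsumption can be sketched as a small rule engine. This is a minimal illustration under simplifying assumptions, not a model of any actual criminal code: the fact features (`takes_property_of_another`, `intent_to_appropriate`, `breaks_into_premises`), the rule that distinguishes theft from burglary, and the norm base are all hypothetical. Cognitive subsumption maps the features of a fact to legal terms; normative subsumption then applies each norm by modus ponens.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Norm:
    """A general norm read as a conditional: if `condition` holds, `consequence` follows."""
    condition: str    # legal term in the antecedent, e.g. "theft"
    consequence: str  # legal consequence (decision), e.g. "sanction for theft"


def cognitive_subsumption(fact: dict[str, bool]) -> set[str]:
    """Step 1: translate the features of a fact (world knowledge) into legal terms.

    The classification rules below are hypothetical simplifications for illustration.
    """
    terms: set[str] = set()
    if fact.get("takes_property_of_another") and fact.get("intent_to_appropriate"):
        if fact.get("breaks_into_premises"):
            terms.add("burglary")
        else:
            terms.add("theft")
    return terms


def normative_subsumption(terms: set[str], norms: list[Norm]) -> list[str]:
    """Step 2: modus ponens. From 'a is A' and the norm 'A -> C', derive 'C'.

    Every norm whose condition is met fires, so one fact may fall under several norms.
    """
    return [norm.consequence for norm in norms if norm.condition in terms]


# Hypothetical norm base.
NORMS = [
    Norm("theft", "sanction for theft"),
    Norm("burglary", "sanction for burglary"),
]

# Action a: property of another taken with intent to appropriate, no break-in.
action_a = {"takes_property_of_another": True, "intent_to_appropriate": True}
qualification = cognitive_subsumption(action_a)         # {"theft"}
decision = normative_subsumption(qualification, NORMS)  # ["sanction for theft"]
print(qualification, decision)
```

Because `normative_subsumption` fires every norm whose condition is met, a qualification containing several legal terms yields several consequences, which reflects the observation that one factual situation can be subsumed under different general norms.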

A key question is who interprets the facts in subsumption: a human or a machine (see Figure 4). A typical example of a machine interpreter is a road radar system. When a driver exceeds the speed limit (cognitive subsumption), the radar system takes a photograph and sends it to a database, where a penalty is assigned (normative subsumption). The radar system thus replaces the police officer or judge who would traditionally establish the speeding violation (cognitive subsumption) and assign a penalty (normative subsumption). In cybergovernance, competence in legal activities can be transferred from humans to machines, which then carry out cognitive subsumption, normative subsumption or both. This transfer of competence can occur both in real-world activities and in activities in three-dimensional virtual worlds.
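The division of labour in the radar example can be sketched as follows. This is a hedged illustration only: the `Measurement` record, the fine schedule and its amounts are hypothetical, and the photograph and database steps are reduced to plain function calls. `cognitive_step` qualifies the measured fact as a speeding violation, and `normative_step` applies the penalty norm to that qualification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Measurement:
    """A hypothetical radar record: the photographed vehicle and its measured speed."""
    plate: str
    speed_kmh: float
    limit_kmh: float


# Hypothetical fine schedule: (excess over the limit in km/h, fine in EUR),
# ordered from the highest threshold down; a fine applies when excess > threshold.
FINE_SCHEDULE = [(30.0, 300), (20.0, 150), (10.0, 70), (0.0, 30)]


def cognitive_step(m: Measurement) -> Optional[float]:
    """Qualify the measured fact: return the excess speed if it is a violation, else None."""
    excess = m.speed_kmh - m.limit_kmh
    return excess if excess > 0 else None


def normative_step(excess: float) -> int:
    """Apply the penalty norm to a qualified violation and return the fine."""
    for threshold, fine in FINE_SCHEDULE:
        if excess > threshold:
            return fine
    raise ValueError("normative_step expects a positive excess")


def radar_decision(m: Measurement) -> Optional[int]:
    """Machine interpreter performing both steps; None means no violation was found."""
    excess = cognitive_step(m)
    if excess is None:
        return None
    return normative_step(excess)


print(radar_decision(Measurement("AB-123", speed_kmh=62, limit_kmh=50)))  # 70
print(radar_decision(Measurement("CD-456", speed_kmh=48, limit_kmh=50)))  # None
```

Keeping the two steps in separate functions mirrors the point made in the text: competence for either step, or for both, can be transferred to the machine, while the remaining step could stay with a human official.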

2.3.

Frame of societal sectors

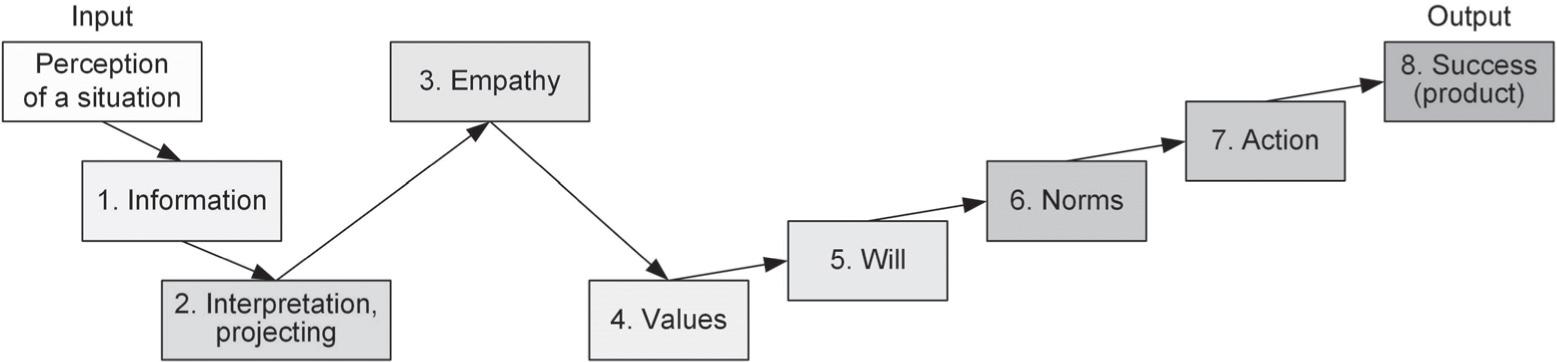

Before listing the societal sectors with which we are concerned, we analyse how a subject perceives and reacts to a situation. The subject’s perception of a situation starts with the input of information (see element 1, ‘Information’ in Figure 5). The subject then interprets the information (element 2, ‘Interpretation’); projection may also take place during this interpretation. Empathy is important for perception (element 3, ‘Empathy’): once the subject has formed a conception of the situation, positive or negative empathy shapes that conception. Next, the subject evaluates the values imputed to the situation (element 4, ‘Values’) and may assess the situation either positively or negatively. The next element is the will, i.e. what the subject does and does not intend (element 5, ‘Will’). Then, other people’s normative judgements of the situation need to be taken into account (element 6, ‘Norms’). The subject then takes an action (element 7, ‘Action’). The last element is the subject’s success (element 8, ‘Success’), which is the product or output of the reactive path.

Figure 5: Perception of a situation by a subject: a reactive path

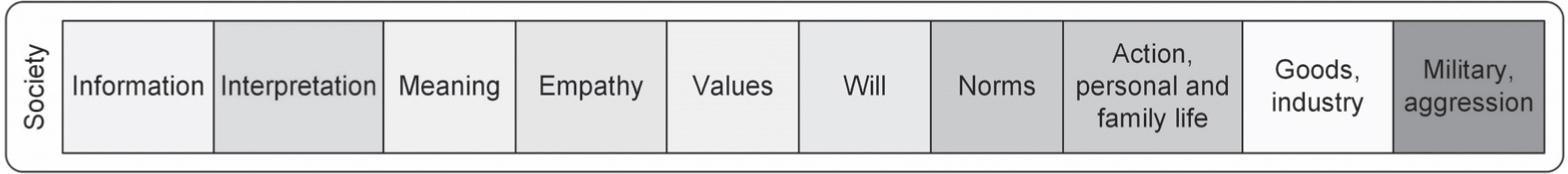

The societal sectors correspond to the elements in Figure 5 and are shown in more detail in Figure 6. Information, the first sector, has several subsectors that shape information for society, such as science and the mass media. The second sector, interpretation, contains subsectors that interpret information, such as religion and ideology. The third sector moulds meaning for society and includes imagination, which is binding for society. The next sector, empathy, shapes sympathy (alpha personalities) or antipathy (omega personalities), and the subsequent sector relates to societal values. The sixth sector, will, relates to the production of societal intentions and includes political parties. The next sector, norms, concerns normativity, and the following sector, action, relates to private life and the family. The ninth sector, goods, comprises business and industries, while the last sector, the military, deals with aggression and violent behaviour.

Figure 6: Societal sectors

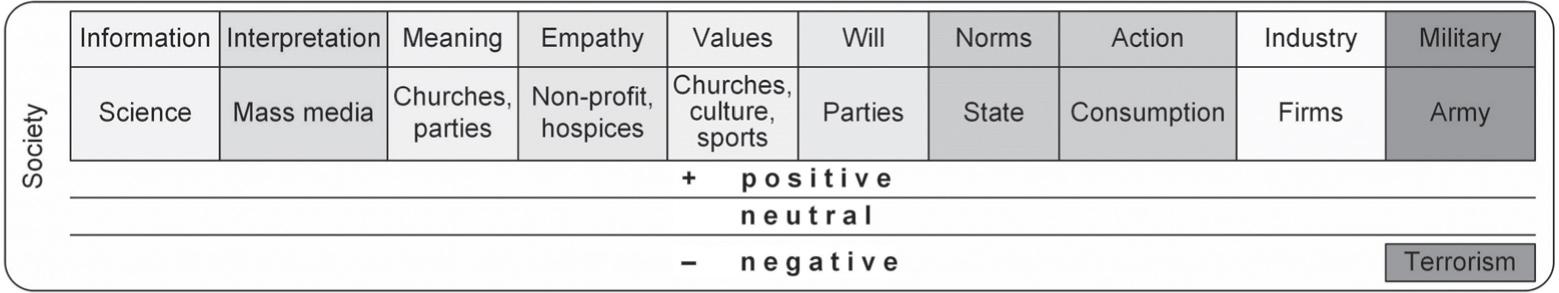

Figure 7: Organisations that frame societal sectors

There are organisations that are connected with each of these societal sectors (Figure 7). In the information sector, for example, there are scientific organisations such as academies of science, scientific societies and scientific museums.

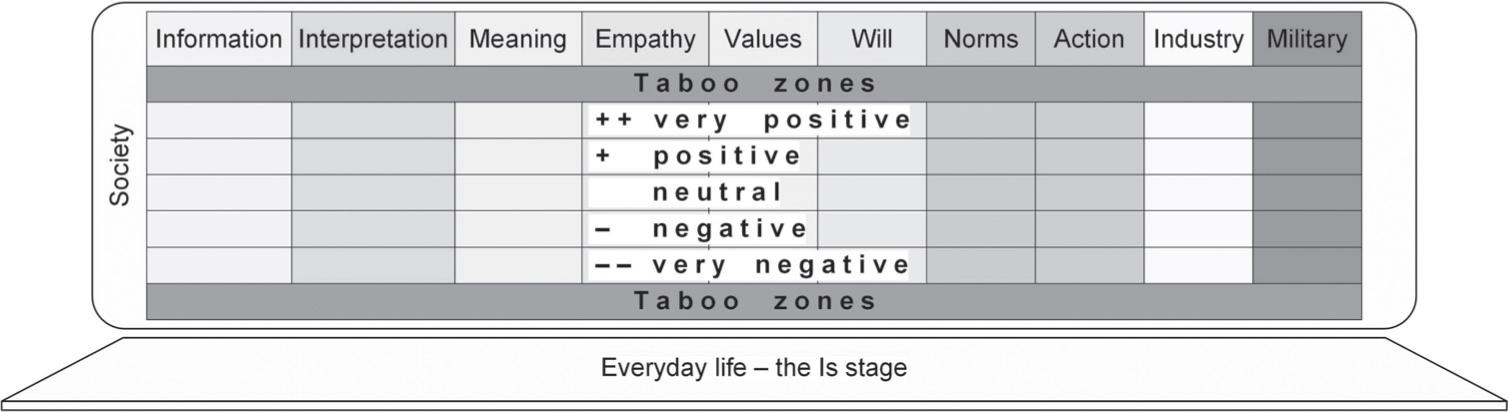

Everyday activities in each societal sector can be evaluated vertically, for example as neutral, positive, very positive, negative, or very negative (Figure 8). There are also taboo levels, which relate to phenomena that are not normally spoken about in society.

Figure 8: The frame of societal sectors

2.4.

Frame of technological changes

The fourth frame, technological changes, includes the changes introduced by the Internet (Figure 9). Particular attention should be paid to the changing role of computer systems. One trend is that computers may become legal machines, which can take decisions of legal significance. There are four domains in the cyber world: (i) the physical domain; (ii) the information domain; (iii) the cognitive domain; and (iv) the social domain [Collier et al. 2013].1 The emergence of new technologies such as artificial intelligence (AI), the Internet of Things (IoT) and robotics poses new risks, and there are specific concerns regarding AI systems (see e.g. [EC 2020] for the requirements for trustworthy AI).

Figure 9: The frame of technological changes

2.5.

Frame of evolution

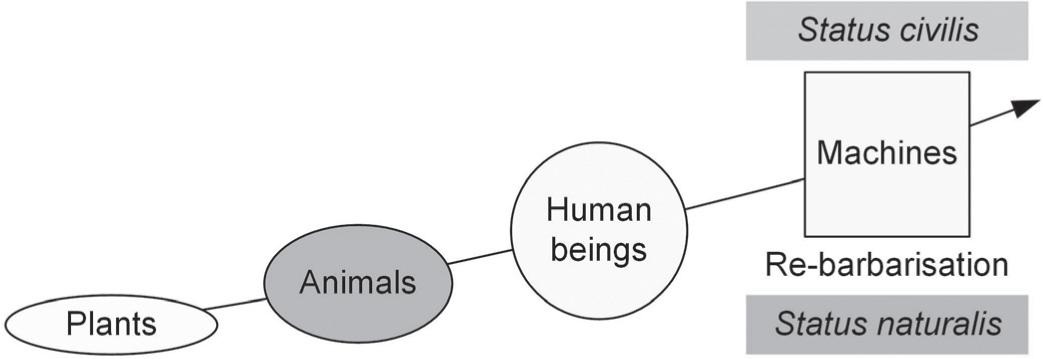

The fifth frame, the frame of evolution, focuses on the role of cybergovernance in biological evolution. For example, consider the line of evolution from plants to animals to human beings to machines, as shown in Figure 10. In the proposed model, biological evolution leads to the development of human beings. The last step, the evolution from humans to machines, however, is a process of technological evolution in which humans produce machines. Moreover, humans strive to give human capabilities to their creatures, thus making machines artificially intelligent, a situation that is reminiscent of the ancient myth of Pygmalion and its modern variations.

One question associated with the evolutionary step from humans to machines is whether machines reside in status civilis or in status naturalis. A relapse into status naturalis is a permanent temptation of modern culture, although such re-barbarisation is a kind of political atavism. Weapons are substitutes for the former raptors. Elements of re-barbarisation can be observed, for example, in EVE Online, a massively multiplayer online game (MMOG). In EVE Online, players take part in “harsh, unforgiving gameplay that lacks many amenities for players that other games of the genre possess”.2 We, however, maintain that machines must not become monsters.

Figure 10: Frame of evolution [Čyras/Lachmayer 2020]

A computer can act as a legal machine whose purpose is regulation via computer code. The program embodies legal rules, and normative and digital expectations are amalgamated. Karavas [2009, 464] argues that “the emergence of the computer as medium has triggered a transformation of the legal sphere that is culminated in the emergence of a techno-digital normativity that seems to undermine Luhmann’s description of the legal system as an autonomous social system.” The risks posed by computers and the dangers of artificial intelligence can be viewed from the perspective of programming (see e.g. [Parnas 2017]) and from the perspective of law (see e.g. [Graber 2020]). Christoph Graber formulates recommendations for avoiding the dangers that platforms using AI pose to fundamental rights.3

Electronic virtualities (e-virtualities). In the evolutionary step from humans to machines, an evolution of spiritual spheres can also be observed, running from animism to magic to religion to e-virtuality. Technologies such as television fulfil old animistic and magic dreams, both in the macrocosm and in the microcosm; for instance, an image projector can imitate the cave paintings of Altamira.

There are several reasons to explore virtuality in its multiple senses, since virtualities are natural to society. First, there are illusory worlds, for example three-dimensional virtual worlds. Secondly, unconscious phenomena and abstract entities can be investigated with scientific methods; the worlds of the ego and the id are investigated here. Next, cybergovernance moves real-world activities into cyberspace, for instance into computerised virtual worlds, and e-government applications enable legal machines to perform functions of the state. Finally, the militarisation of the state seeks enemies: the notion of external enemies is supplemented with that of internal enemies, and propaganda wars are carried out alongside virtual wars.

Losing touch with reality. Electronic virtualities introduce the risk of losing touch with reality and failing to see the horizon. This also applies to virtual worlds and computer games. Here, the reader may recall Plato’s allegory of the cave (see e.g. Wikipedia at https://en.wikipedia.org/wiki/Allegory_of_the_cave). What is reported in the press, on television and in social networks can be compared with the shadows projected on the wall of the cave: these shadows form reality for the watchers but are not accurate representations of the real world. Today, there is a risk of losing control over reality and of living in an illusory reality.

3.

On the word ‘cybergovernance’

The word ‘cyber’ can be attached to almost anything to make it sound futuristic or technical. Some prefer the shorter and more efficient ‘e-’ prefix, and the word ‘cybergovernance’ may therefore be interpreted as ‘e-governance’ or ‘electronic governance’. Wikipedia contains a definition of electronic governance.4

4.

Legal personhood of electronic persons

Schweighofer [2007, 18] states that “legal systems have to consider giving intelligent agents or robots some form of ‘limited’ legal personality in order to allow the application of the concepts of representation and responsibility.” The open question is how far such a ‘limited’ legal personality can extend.

Solaiman [2017, 172] maintains that “robots are presently recognised as a product or property at law”. His review of legal personhood is mainly concerned with industrial robots and concludes that “robots are ineligible to be persons, based on the requirements of personhood” [ibid., 155, emphasis added]. Solaiman [2017, 161] formulates three attributes of legal personhood, including “the ability to exercise rights and to perform duties”. Bryson et al. [2017, 274] refer to Solaiman and stress two requirements for a legal person: first, “it is able to know and execute its right as a legal agent” and, second, “it is subject to legal sanctions ordinarily applied to humans”. Bryson et al. [2017, 289] argue that “conferring legal personality on robots is morally unnecessary and legally troublesome.” Bryson et al. [2017, 275] suggest that there is no moral obligation to recognise the legal personhood of artificial intelligence, and “recommend against the extension of legal personhood to robots, because the costs are too great and the moral gains are too few.” Bryson et al. hold that legal personhood is not all or nothing, but stress that conferring legal obligations on machines requires procedures to enforce them.7 It remains unclear how dispute settlement procedures could be operationalised.8

Solaiman [2017, 170] argues that “as the law presently regards, there is no ‘in-between’ position of personhood […] because entities are categorized in a simple, binary, ‘all-or-nothing’ fashion”. However, Bryson et al. [2017, 280] argue that legal personality is divisible.9 We agree that the legal position of a legal machine in the role of a governance body requires regulation by the law. Weng et al. [2019] suggest that, with respect to rights and responsibilities, a separate set of laws would be needed for robots.

5.

References

- Beattie, Scott, Community, Space and Online Censorship: Regulating Pornotopia. Routledge, London and New York 2009. DOI: 10.4324/9781315573007.

- Bryson, Joanna J./Diamantis, Mihailis E./Grant, Thomas D., Of, For, and By the People: The Lacuna of Synthetic Persons, Artificial Intelligence and Law, volume 25, issue 3, 2017, pp. 273–291. DOI: 10.1007/s10506-017-9214-9.

- Collier, Zachary A./Linkov, Igor/Lambert, James H., Four Domains of Cybersecurity: A Risk-based Systems Approach to Cyber Decisions, Environment Systems and Decisions, volume 33, 2013, pp. 469–470. DOI: 10.1007/s10669-013-9484-z.

- Čyras, Vytautas/Lachmayer, Friedrich, Legal Visualisation in the Digital Age: From Textual Law towards Human Digitalities. In: Hötzendorfer, Walter/Tschohl, Christof/Kummer, Franz (Eds.), International Trends in Legal Informatics: Festschrift for Erich Schweighofer, Editions Weblaw, Bern 2020, pp. 61–76.

- Dodge, Martin/Kitchin, Rob, Mapping Cyberspace. Routledge, London and New York 2001.

- EC: Assessment List for Trustworthy Artificial Intelligence (ALTAI) for Self-assessment. European Commission 2020. DOI: 10.2759/002360, https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=68342.

- Fillmore, Charles J., Frame Semantics. In: Geeraerts, Dirk (Ed.), Cognitive Linguistics: Basic Readings, Mouton de Gruyter, Berlin 2006, pp. 373–400. Originally published in Linguistics in the Morning Calm, Linguistic Society of Korea (Ed.), Hanshin Publishing Company, Seoul 1982, pp. 111–137.

- Graber, Christoph B., Artificial Intelligence, Affordances and Fundamental Rights. In: Hildebrandt, Mireille/O’Hara, Kieron (Eds.), Life and the Law in the Era of Data-Driven Agency, Edward Elgar, Cheltenham 2020, pp. 194–213. DOI: 10.4337/9781788972000.00018. Available at SSRN: https://ssrn.com/abstract=3299505.

- Karavas, Vaios, The Force of Code: Law’s Transformation under Information-Technological Conditions, German Law Journal, volume 10, issue 4, 2009, pp. 463–482. DOI: 10.1017/S2071832200001164.

- Schweighofer, Erich, E-Governance in the Information Society. In: Schweighofer, Erich (Ed.), Legal Informatics and e-Governance as Tools for the Knowledge Society, LEFIS Series 2, 2007, pp. 13–23.

- Solaiman, S. M., Legal Personality of Robots, Corporations, Idols and Chimpanzees: A Quest for Legitimacy, Artificial Intelligence and Law, volume 25, 2017, pp. 155–179. DOI: 10.1007/s10506-016-9192-3.

- Weng, Yueh-Hsuan/Izumo, Takashi, Natural Law and its Implications for AI Governance, Delphi – Interdisciplinary Review of Emerging Technologies, volume 2, issue 3, 2019, pp. 122–128. DOI: 10.21552/delphi/2019/3/5.

- 1 “The physical domain includes hardware and software and networks as building blocks of cyber infrastructure […] Monitoring, information storage, and visualization are features of the information domain […] Information should be properly analyzed and sensed as well as used for decision making in the cognitive domain […] Decisions on cybersecurity should be consistent with social, ethical, and other considerations that are characteristic on their developing social domain” Collier et al. [2013, 469–470].

- 2 Radosław Pałosz continues: “Game authors encourage a play style that would be treated as illegal or unethical in real life. EVE’s world, because of its complexity, allows sophisticated frauds like financial pyramids identical in their essence to those known from real life to happen in-game” (see Pałosz’s abstract “Virtual World as a State of Nature: Rule-creating Activity of MMOG Players” at the Special Workshop 3 Artificial Intelligence and Digital Ontology in conjunction with the IVR World Congress 2019 in Lucerne).

- 3 Graber [2020] begins with the observation that “[t]he expansionism of giant platform firms has become a major public concern, an object of political scrutiny and a topic for legal research. As the every-day lives of platform users become more and more “datafied”, the “power” of a platform correlates broadly with the degree of the firm’s access to big data and artificial intelligence (AI).” Graber writes about affordances (the possibilities and constraints of a technology) and concludes with four recommendations concerning AI.

- 4 “Electronic governance or e-governance is the application of IT for delivering government services, exchange of information, communication transactions, integration of various stand-alone systems between government to citizen (G2C), government-to-business (G2B), government-to-government (G2G), government-to-employees (G2E) as well as back-office processes and interactions within the entire government framework”; see Wikipedia at https://en.wikipedia.org/wiki/E-governance with reference to Saugata, B./Masud, R.R. (2007), Implementing E-Governance Using OECD Model (Modified) and Gartner Model (Modified) Upon Agriculture of Bangladesh. Wikipedia continues: “Through e-governance, government services are made available to citizens in a convenient, efficient, and transparent manner. The three main target groups that can be distinguished in governance concepts are government, citizens, and businesses/interest groups”; see Garson, D.G. (2006), Public Information Technology and E-Governance.

- 5 ‘Cyberspace’ means, literally, ‘navigable space’ (from the Greek kyber – “to navigate”); see Beattie [2009, 94] referring to Dodge and Kitchin [2001, 1].

- 6 Dodge and Kitchin [2001, 1] write: “[Cyberspace] is a virtual space created by global computer networks connecting people, computers and documents in the entire world and creating space that we can move in. Cyberspace is an interesting challenge for the space philosophy. It is made of billions of binary figures, it exists in various forms including web-pages, chat rooms, bulletin boards, virtual spaces, databases each of them with its own geography. The cyberspace consists of many spaces produced by their designers and in many cases by their users. They only accept formal properties of “geographic” (Euclid’s) space if they are thus programmed. Besides, the spaces are often only visual, the objects have no weight or mass and their spatial establishment is not safe – they can appear and disappear in a moment.”

- 7 Bryson et al. [2017, 282] write: “Just as legal rights mean nothing if the legal system elides the standing to protect them, legal obligations mean nothing in the absence of procedure to enforce them.”

- 8 Bryson et al. [2017, 288] continue: “Giving robots legal rights without counterbalancing legal obligations would only make matters worse … This would not necessarily be a problem, if 1. the other problems of legal personality-like standing and availability of dispute settlement procedures-were solved; and 2. the electronic legal person were solvent or otherwise answerable for rights violations. But it is unclear how to operationalize either of these steps.”

- 9 Bryson et al. [2017, 280] write: “Legal personhood is not an all-or-nothing proposition. Since it is made up of legal rights and obligations, entities can have more, fewer, overlapping, or even disjointed sets of these.”