1.

Motivation

In the area of legal visualization, several approaches have been proposed in recent years that ease the interaction with legal scenarios and help legal experts and laypersons alike to understand complex legal relationships, cf. [Fill/Grieb 2017], [Fill/Haiden 2016], [Haapio 2011]. The forms of these representations range from data visualizations, diagrams, and pictures to photos, screenshots and comics [Mielke et al. 2017]. One aim of many legal visualizations is to support users in solving concrete legal cases, e.g., in the domain of contract law [Haapio 2011] or tenancy law [Kahlig 2014]. Users can thus analyze a given legal situation by consulting the corresponding visual representations and derive potential actions. For this purpose, various forms of diagrams as well as more specific types of visualizations, e.g., comics, have been proposed. In addition, legal visualization is often characterized by a high degree of interdisciplinarity: researchers from the domains of law, business, computer science and business informatics jointly design and implement new forms of representation using a large set of technologies, e.g., [Fill 2007], [Heddier/Knackstedt 2012]. Following this tradition of interdisciplinarity, we propose in this paper to employ augmented reality for embedding legal visualizations into the work practices of users. In this way, we envisage making legal visualizations directly available in the environment of users who have to deal with a particular legal scenario, and attaching them to a situational context.

To discuss this idea in depth, we first describe in Section 2 the foundations of augmented reality and the potential this technology provides for merging virtual, electronic visual representations with the real world and for enabling new forms of so-called device-less interaction. In Section 3 we illustrate how the aspects context, content, and interaction need to be considered for augmented reality enhanced legal visualizations. In Section 4 we apply these aspects to a fictitious use case, which permits us to draw a first conclusion and present the next steps for future research in Section 5.

2.

Foundations of Augmented Reality

Augmented Reality (AR) is a technology that allows computer-generated virtual images to be overlaid on physical objects in real time [Zhou et al. 2008]. A generally accepted definition of AR comes from Azuma [Azuma 1997], who describes AR as a technology that combines the real world and virtual imagery, is interactive in real time and registers virtual imagery with the real world. Besides AR, there are other concepts and technologies for extending a real or virtual world, namely virtual reality (VR) and mixed reality (MR). The three terms AR, VR and MR can be summarized under the generic term ‘extended reality’ (XR) [Oberhauser/Pogolski 2019]. While VR can be clearly distinguished from AR and MR because it completely isolates the user from reality and offers a high degree of immersion, the distinction between AR and MR is not straightforward. To simplify things, we regard MR as an enhanced and more interactive version of AR. In the remainder of this paper, we discuss only augmented reality.

At a higher level, AR technology can be divided into electronic sensors and devices for the input and output of information, and components for processing this information. The output can be visual, acoustic or haptic, with visual output being the main part in AR. For input, different sensors are needed to sense and monitor the environment and to make the overlay of real and virtual objects as natural as possible. These sensors must be able to detect motion, orientation and the objects of the environment. For motion sensors, we can distinguish between acceleration, i.e., linear motion along the x-, y- or z-axis, and rotational motion about these three axes. Orientation can be calculated relative to a fixed magnetic reference point, typically magnetic north. In combination, such sensors help to track the motion and orientation of the AR device, and thus of the user, and to adjust the representation of the virtual objects accordingly. The environment is composed of objects in real space, their depth mapping, as well as the light conditions or color mapping. It is typically sensed via one or multiple camera sensors whose information is processed accordingly. If all these components are present, a realistic combination of the real world and virtual objects can be achieved.
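As an illustration of how orientation can be derived relative to magnetic north, the following minimal sketch computes a compass heading from the horizontal components of a magnetometer reading. The function name, the axis convention and the assumption that the device is held level are ours and are not taken from a particular AR device or SDK.

```javascript
// Hypothetical helper: derive a compass heading from the horizontal
// components of a magnetometer reading.
// Assumptions (not from the paper): the device is held level, `forward`
// is the field component along the viewing direction and `right` is the
// component towards the user's right-hand side.
function headingDegrees(forward, right) {
  // When facing east, magnetic north lies to the left, so `right` is negated.
  const radians = Math.atan2(-right, forward);
  const degrees = (radians * 180) / Math.PI;
  // Normalize the angle to the range [0, 360).
  return ((degrees % 360) + 360) % 360;
}

// Facing east: magnetic north points to the left of the device.
console.log(headingDegrees(0, -1).toFixed(1)); // prints "90.0"
```

A real device would additionally combine this reading with the acceleration and rotation sensors to compensate for tilt and magnetic disturbances.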

The application of augmented reality has been explored in various areas such as personal information systems, industrial and military applications, medical applications, entertainment and the office [van Krevelen/Poelman 2010]. On a functional level, AR environments can be classified into two broad categories [Hugues et al. 2011]: augmented perception and artificial environments. The first category emphasizes that AR provides a decision-making support tool. It can provide information that allows a better understanding of reality and thereby ultimately optimizes our actions in relation to reality. This functionality can be divided into further sub-functionalities, ranging from the expansion of information to the replacement of reality by virtual objects. This enables the visualization of objects and relations that are only recognizable via AR. The second category concerns artificial environments, i.e., environments that do not represent reality as it is perceived but augment it with information that is not perceivable, yet could exist in a reality of the future or the past. An example would be the visualization of a church tower that collapsed a long time ago.

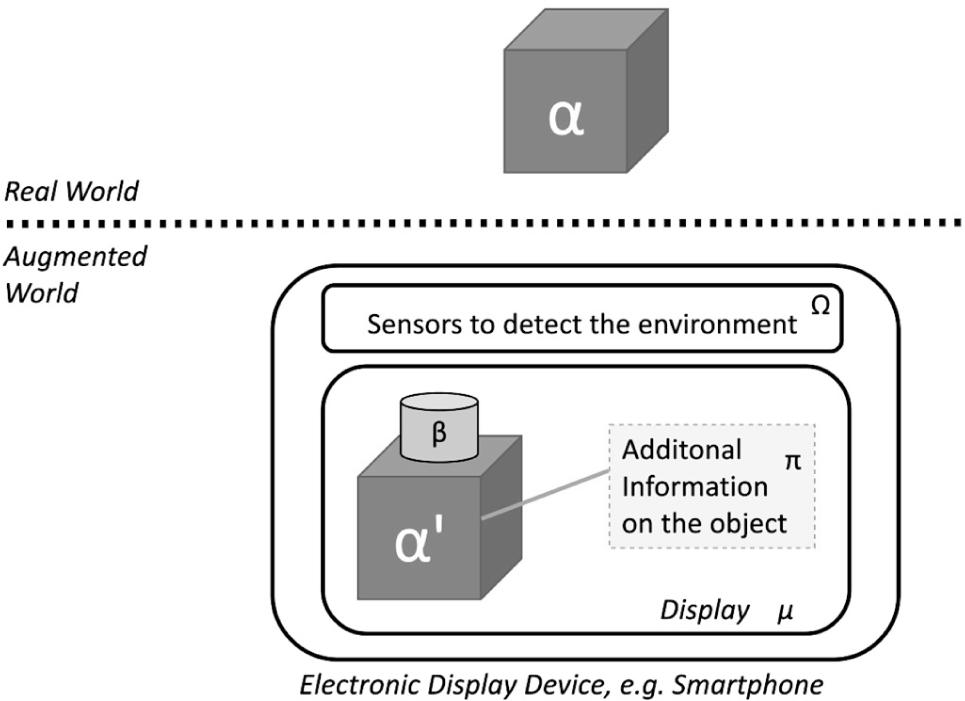

Diverse types of output devices exist for enabling AR functionalities. Different sensors (Ω) capture the reality (α) and the environment of the user. An output device then shows an image of the real environment (α′) together with the additional virtual objects (β) on a display (µ). In addition to virtual objects, information (π) can be projected onto real or virtual objects (see Figure 1).

Figure 1: Example of an AR environment

In terms of technical realization, different aspects must be considered when developing applications for different devices. Two main output technologies can be distinguished. The first is output via a device with a screen, for example a smartphone or a VR headset, which has integrated cameras and other sensors to capture the environment. The second is see-through holographic lenses. These permit the user to see the real world nearly without restrictions and embed the virtual objects into reality by means of holographic projection, based on the measurements of different sensors. Some devices even project directly into the eye. In terms of development, two approaches have to be distinguished in turn: platform-dependent and platform-independent development. Platform-dependent development requires particular software platforms. For example, Unity¹, Unreal² or OpenXR³ can be used for the development of platform-dependent AR applications. Platform-independent development approaches typically run in browser-based environments. One example is the WebGL application programming interface⁴. In combination with a framework such as three.js⁵, which provides easy access to WebGL, augmented reality applications can be developed and used independently of any platform, and thus, in principle, on any AR device that provides a compatible browser.

3.

Legal Visualizations and Augmented Reality

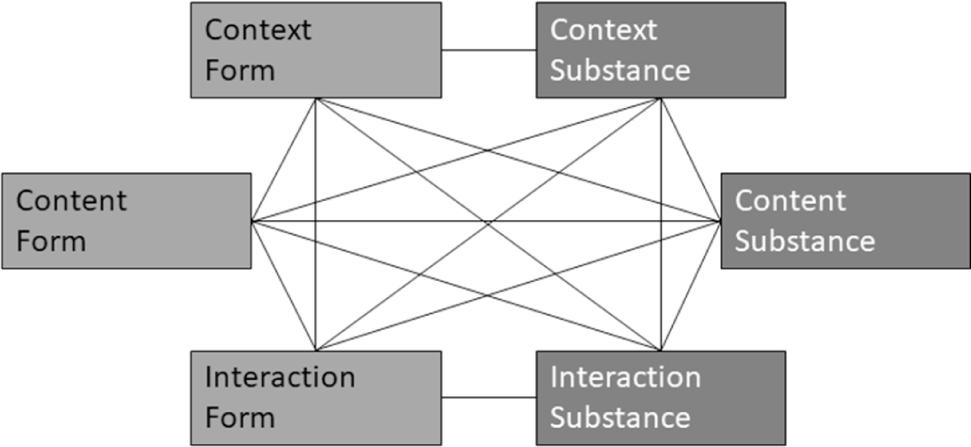

For bringing together legal visualizations and augmented reality, we present in the following the first conception of a framework that describes which aspects need to be considered based on the properties of augmented reality applications. This permits us to investigate how to transition existing legal visualizations into the realm of augmented reality or to design new types of legal visualizations with this technology. For all parts of the framework, we distinguish between the form that is required to convey information and the substance of information that is conveyed via the form. We further consider three aspects that we deem relevant for augmented reality: context, content, and interaction.

Figure 2: Concept of context, form, and interaction

In terms of the context, augmented reality applications embed virtual representations in the real-world context. Thereby the form of the context must be recognized as well as its substance. For considering the context, augmented reality applications can monitor the environment via cameras and other sensors and analyze where the user is located, which objects are currently within the visible space of the user and what properties these objects have, i.e., the form of the context. The augmented reality application can then infer not only the existence of objects but may also classify them further based on additionally perceived attributes, e.g., the current state of a machine or the actions of a person. For this it needs to interpret the perceived attributes and embed them into previously stored knowledge to derive the context substance.

Joining the context aspect with legal visualizations permits tailoring the display of a legal visualization to the context in which a user is currently positioned. For example, we imagine that an augmented reality application determines that a user is located at a particular position in a factory and may require information about the legal regulations that apply at that position, e.g., the health and safety regulations for that factory area. The application could then decide which of the recognized objects is suitable as a surface for projecting the legal visualization that explains the relevant regulations.
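The decision about a suitable projection surface could be sketched as follows. This is a minimal illustration under our own assumptions: the object descriptions, their field names and the selection rule (nearest planar object with sufficient area) are hypothetical and do not correspond to the API of a specific AR platform.

```javascript
// Hypothetical description of objects recognized by the AR device.
// The field names are assumptions for illustration, not an AR SDK API.
const recognizedObjects = [
  { label: "machine", planar: false, areaM2: 2.5, distanceM: 1.5 },
  { label: "wall", planar: true, areaM2: 6.0, distanceM: 3.0 },
  { label: "cabinet door", planar: true, areaM2: 0.8, distanceM: 1.0 },
];

// Pick the nearest planar object that offers at least the required area.
// Returns null if no recognized object qualifies.
function pickProjectionSurface(objects, minAreaM2) {
  const candidates = objects
    .filter((o) => o.planar && o.areaM2 >= minAreaM2)
    .sort((a, b) => a.distanceM - b.distanceM);
  return candidates.length > 0 ? candidates[0] : null;
}

const surface = pickProjectionSurface(recognizedObjects, 1.0);
console.log(surface ? surface.label : "no suitable surface"); // prints "wall"
```

Other selection rules, e.g., preferring surfaces within the current field of view, would fit the same structure.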

The content aspect focuses on the information that is presented to the user via the augmented reality application. Again, we distinguish between the form of the content, i.e., the kind of visual representation that is used to transport the information (e.g., an image, a diagram or a three-dimensional representation), and the substance of the content, i.e., the nature of the information (e.g., numeric data, procedures or textual information). The content may be created dynamically or be static. Creating the form of the content draws on the field of computer graphics, which investigates the technical specification of graphical objects and their display on the augmented reality hardware. Due to recent developments in this area, a wide range of application programming interfaces (APIs) is available today that greatly ease the specification of such graphical objects. Regarding the substance of the content, it must be decided what is required in the current context and how it can be tailored to the needs of a user.

For legal visualizations it may be necessary to adapt their content to the specific requirements of the augmented reality environment. For example, many legal visualizations are not available in 3D formats that are required for projecting the information into the real-world three-dimensional space. Thus, the form of these visualizations must be transitioned in an adequate manner, e.g., by projecting them onto a plane and considering the frequently limited space of augmented reality environments. In terms of the substance of the content, legal visualizations can be dynamically adapted to the context of the user. For example, a legal visualization that explains the legal regulations in a factory environment in the form of a process diagram is dynamically adapted to show only the process parts that are relevant at the current location, e.g., in terms of health regulations. Once the user moves to another location, the content is dynamically adapted, and another process part is shown.
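The dynamic adaptation of the content substance to the user's location can be sketched as a simple filter over the parts of a process diagram. The step identifiers, area names and function names below are invented for illustration and are not part of an existing legal visualization.

```javascript
// Hypothetical process model: each step is tagged with the factory
// areas in which it is relevant. All names are illustrative only.
const processSteps = [
  { id: "wear-ear-protection", areas: ["press-hall"] },
  { id: "wear-safety-goggles", areas: ["press-hall", "paint-shop"] },
  { id: "check-ventilation", areas: ["paint-shop"] },
];

// Return only the steps that are relevant for the given area.
function stepsForArea(steps, area) {
  return steps.filter((step) => step.areas.includes(area));
}

// Called whenever the recognized location changes; returns the ids of
// the process parts that should now be displayed.
function onLocationChanged(steps, newArea) {
  return stepsForArea(steps, newArea).map((step) => step.id);
}

console.log(onLocationChanged(processSteps, "paint-shop"));
// prints [ 'wear-safety-goggles', 'check-ventilation' ] in Node.js
```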

Interaction in augmented reality environments can take various forms, as outlined in the previous section. Again, we distinguish between the form of interaction (e.g., device-less interaction through gesture recognition, or the use of devices such as bats for pointing at and selecting objects in three-dimensional environments) and the substance of interaction, i.e., the intention of the user and the goal of the interaction, such as moving an object, selecting an object or entering information.

In the context of legal visualizations, interaction with visualizations has so far received little attention. An exception is the work by Newesely, who discussed the interaction with legal visualizations for persons with limited abilities [Newesely 2012]. Investigating the interaction aspect from the viewpoint of augmented reality is therefore rather new in the area of legal informatics. As illustrated in [Bodenski 2018], augmented reality often requires completely new forms of interaction, e.g., through new devices, for realizing the traditional substance of interaction.

4.

Fictitious Use Case for Applying the Framework

Using the dimensions of context, content and interaction, as well as form and substance, we can analyze the concrete manifestations of these dimensions in a fictitious use case. For the example, we imagine a scenario where a landlord wants to rent out an apartment. As she is not sure which legal regulations apply, she activates her AR equipment. The equipment scans the environment and embeds a legal visualization in the room. The legal visualization shows the process of calculating the rent for different types of buildings or facilities, taking the legal system into account.

For the context, the form is in this example the rental of an apartment, where a room of this apartment is used to visualize the legal regulations. The substance of the context is this room, including all of its objects and properties, e.g., recognized objects such as a wall or the ceiling. Additionally, the AR application may infer that this room belongs to an apartment and that this apartment is available for rent.

The form of the content is in this case a 3D model of a legal visualization: the application loads an image file of the legal visualization and projects this image as a texture onto a plane in 3D space, which is constructed using the JavaScript library three.js. The substance of the content, i.e., the nature of the information, is a mixture of textual and procedural information, since the visualization depicts a kind of process. This should enable the viewer to correctly classify and interpret the given information.

The form of interaction is device-less: the AR environment runs on the Microsoft HoloLens 2 and solely uses the hands of the user. The virtual object can be placed in the environment by grabbing and dragging it, where pressing the index finger and thumb together is considered the start event for grasping an object. The substance of the interaction, i.e., its goal, is to place the virtual object in the room in such a way that the user can observe it from a useful position without being obstructed or disturbed by it. This rudimentary example does not cover selecting single elements or extending information in the model.
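The grasp start event described above, i.e., pressing the index finger and thumb together, can be approximated by checking the distance between the two tracked fingertips. The following is a minimal sketch under our own assumptions: fingertip positions are given as plain `{x, y, z}` objects in meters, the 2 cm threshold is a hypothetical value, and the test whether the pinch actually starts on the object is omitted. It does not use the hand-tracking API of the HoloLens 2.

```javascript
// Euclidean distance between two 3D points given as {x, y, z} in meters.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Hypothetical threshold: fingertips closer than 2 cm count as a pinch.
const PINCH_THRESHOLD_M = 0.02;

function isPinching(thumbTip, indexTip, threshold = PINCH_THRESHOLD_M) {
  return distance(thumbTip, indexTip) < threshold;
}

// Called once per frame: tracks the grab state and moves the object
// while the user is pinching.
function updateGrab(state, thumbTip, indexTip, object) {
  const pinching = isPinching(thumbTip, indexTip);
  if (pinching) {
    // While grabbed, the object follows the index fingertip.
    object.position = { ...indexTip };
  }
  // `started` is true only in the frame in which the pinch begins.
  return { grabbing: pinching, started: pinching && !state.grabbing };
}

const panel = { position: { x: 0, y: 1, z: -1 } };
let grabState = { grabbing: false, started: false };
grabState = updateGrab(
  grabState,
  { x: 0.1, y: 1.2, z: -0.5 },
  { x: 0.11, y: 1.2, z: -0.5 },
  panel
);
console.log(grabState.started, panel.position);
```

Separating the detection of the gesture (form of interaction) from the resulting placement of the object (substance of interaction) mirrors the distinction made in the framework.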

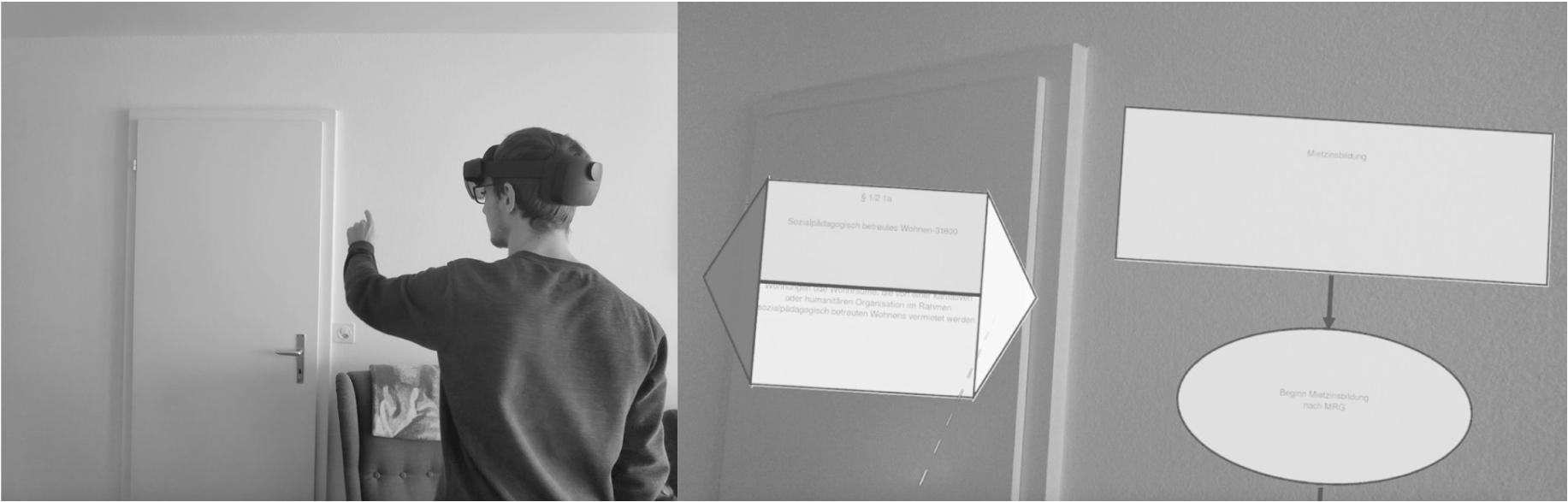

Figure 3: Example of a C.O.N.T.E.N.T visualization with a HoloLens 2

To test the concept described above, a prototypical implementation of a C.O.N.T.E.N.T visualization [Kahlig/Kahlig 2015] has been realized. In this example, we use a legal visualization for calculating the rent according to law. It is derived from the implementation of the C.O.N.T.E.N.T modeling language in ADOxx [Fill/Karagiannis 2013]. Further, an instance of the modeling language, i.e., the model, was implemented in a three-dimensional environment in JavaScript using the three.js framework. Served from a web server, the visual representation of the model could be embedded and viewed in the real world using a HoloLens 2⁶. Figure 3 shows what this example looks like in a first implementation.
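When an image of a legal visualization is used as a texture on a plane, the plane should keep the aspect ratio of the image while fitting into the limited space of the room. The following sketch shows one way to compute such dimensions. The function name and the example values are our assumptions and are not taken from the prototype.

```javascript
// Compute plane dimensions (in meters) that preserve the aspect ratio of
// an image while fitting into the available space.
// Hypothetical helper for illustration; not part of the prototype.
function fitPlane(imageWidthPx, imageHeightPx, maxWidthM, maxHeightM) {
  const aspect = imageWidthPx / imageHeightPx;
  // Start with the full available width ...
  let width = maxWidthM;
  let height = width / aspect;
  // ... and shrink if the resulting height exceeds the available height.
  if (height > maxHeightM) {
    height = maxHeightM;
    width = height * aspect;
  }
  return { width, height };
}

// A 1600 x 900 pixel image in a free space of 2.0 m x 1.0 m.
const size = fitPlane(1600, 900, 2.0, 1.0);
console.log(size.width.toFixed(2), size.height.toFixed(2)); // prints "1.78 1.00"
```

In a three.js scene, the resulting width and height could, for example, be passed to `THREE.PlaneGeometry` before the image texture is applied to the plane.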

5.

Conclusion and Next Steps

Applications in the field of legal visualization have great potential in the area of augmented reality. Up to now, visualizations could only be represented clearly on large posters or as 2D representations on computer screens. Augmented reality, which is already widespread in many other areas, opens a new field of application. In a simple version such as the example implemented here, no major problems arise. In more complex cases, however, e.g., with more complex interaction activities, new questions arise, for instance how to infer information on the context and how to adapt the content accordingly. Furthermore, it would be desirable to capture the context substance in different cases so that the right information is always available.

Another area of application that could be simplified by using AR in combination with legal visualizations is the modeling of such visualizations. If it can be made easy and intuitive for the creator of a legal visualization to create a representation of a legal framework, many more experts in legal areas could document their knowledge and the embedding of legal visualization in work practices could gain importance.

6.

Literature

- Bettina Mielke/Caroline Walser Kessel/Christian Wolff, 20 Jahre Rechtsvisualisierung – Bestandsaufnahme und Storytelling, in: Jusletter IT 23. Februar 2017

- Feng Zhou/Henry Been-Lirn Duh/Mark Billinghurst, Trends in Augmented Reality Tracking, Interaction and Display: A Review of Ten Years of ISMAR, 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, IEEE, 2008, pp. 193–202, doi:10.1109/ISMAR.2008.4637362.

- Hans-Georg Fill/Dimitris Karagiannis, On the Conceptualisation of Modelling Methods Using the ADOxx Meta Modelling Platform, Enterprise Modelling and Information Systems Architectures, vol. 8, no. 1, 2013, pp. 4–25, doi:10.1007/BF03345926.

- Georg Newesely, Beeinträchtigung der Verarbeitung von Rechtsvisualisierungen bei neurologisch bedingten Störungen der höheren visuellen Funktionen, in: Jusletter IT 29. Februar 2012

- Hans-Georg Fill/Andreas Grieb, Visuelle Modellierung des Rechts: Vorgehensweise und Praktische Umsetzung für Rechtsexperten, in: Jusletter IT 23. Februar 2017

- Hans-Georg Fill/Katharina Haiden, Visuelle Modellierung für rechtsberatende Berufe am Beispiel der gesetzlichen Erbfolge, in: Jusletter IT 25. Februar 2016

- Hans-Georg Fill, On the Technical Realization of Legal Visualizations, in: Jusletter IT 22. Februar 2007

- Helena Haapio, A Visual Approach to Commercial Contracts, in: Jusletter IT 24. Februar 2011

- Olivier Hugues/Philippe Fuchs/Olivier Nannipieri, New Augmented Reality Taxonomy: Technologies and Features of Augmented Environment, Handbook of Augmented Reality, edited by Borko Furht, Springer New York, 2011, pp. 47–63, doi:10.1007/978-1-4614-0064-6_2.

- Marcel Heddier/Ralf Knackstedt, Herausforderungen der Rechtsvisualisierung aus Perspektive der Wirtschaftsinformatik, in: Jusletter IT 29. Februar 2012

- Roy Oberhauser/Camil Pogolski, VR-EA: Virtual Reality Visualization of Enterprise Architecture Models with ArchiMate and BPMN, Business Modeling and Software Design, edited by Boris Shishkov, vol. 356, Springer International Publishing, 2019, pp. 170–87, doi:10.1007/978-3-030-24854-3_11.

- Ronald Azuma, A Survey of Augmented Reality, 1997, p. 48.

- Stefan Bodenski, Erweitert «Augmented Reality» den Blick auf den Justizarbeitsplatz der Zukunft?, in: Jusletter IT 4. Dezember 2018

- Rick van Krevelen/Ronald Poelman, A Survey of Augmented Reality Technologies, Applications and Limitations, International Journal of Virtual Reality, vol. 9, no. 2, Jan. 2010, pp. 1–20, doi:10.20870/IJVR.2010.9.2.2767.

- Wolfgang Kahlig/Eleonora Kahlig, Rechtsvisualisierung – Viribus Unitis – mit C.O.N.T.E.N.T., in: Jusletter IT 26. Februar 2015

- Wolfgang Kahlig, Regelstrukturierungsverfahren für legistische Problemstellungen, in: Jusletter IT 11. September 2014

- 1 https://docs.unity3d.com/Manual/index.html (visited on: 06.11.2020).

- 2 https://docs.unrealengine.com/en-US/index.html (visited on: 06.11.2020).

- 3 https://www.khronos.org/registry/OpenXR/#apispecs (visited on: 06.11.2020).

- 4 https://developer.mozilla.org/en-US/docs/Web/API/WebGL_API (visited on: 09.11.2020).

- 5 https://threejs.org/docs/ (visited on: 06.11.2020).

- 6 https://www.microsoft.com/en-us/hololens (visited on: 06.11.2020).