For years, the European Commission has fostered EU-wide cooperation in the area of AI to increase European competitiveness and has striven to ensure trust in the sector. In 2018, the European Strategy on AI, “Artificial Intelligence for Europe”, was published.2 At the centre of the strategy was the following premise: “Artificial intelligence (AI) is already part of our lives – it is not science fiction.”3 Artificial intelligence was no longer a figment of the imagination but had in fact become reality. And while the technology promises many opportunities, it is also accompanied by a variety of novel risks to fundamental freedoms.4 It followed that these risks needed to be addressed within the legal framework. Even though the issues had already been researched for decades, the creation of legal norms addressing them was still in its infancy. Soon afterwards, the High-Level Expert Group on AI published the Guidelines for Trustworthy AI as a first step towards sketching out requirements for artificial intelligence in the European Union.5 Furthermore, as a result of the strategy, a coordinated plan for AI “Made in Europe” was established. As a major milestone, the European Commission presented the White Paper on AI in 2020.6 The White Paper ultimately served as a basis for the new proposal for an EU regulation on artificial intelligence.

The objective of the regulation is to lay down harmonised rules on artificial intelligence. It aims to provide clear requirements for all stakeholders while alleviating financial burdens, to guarantee safety and fundamental rights and to increase investment and innovation.7 The proposal follows a risk-based approach to AI regulation8, meaning that artificial intelligence systems will have to adhere to requirements proportional to the risk they pose. If a system is considered “high-risk”, it will have to comply with the requirements on human oversight set out in Article 14 of the regulation. According to Article 14 par 4 lit (b), these requirements include the obligation to remain aware of so-called “automation bias”, which will be the focal point of the following analysis. The paper aims to give a short overview of the relevant parts of the new proposal and to clarify what constitutes “automation bias”. The examination highlights how difficult it is to comply with this seemingly small requirement and proposes possible solutions.

2.

Brief Overview: Proposal for the Regulation on AI

As illustrated above, the development of a legal framework on artificial intelligence has resulted in the proposal for the “Artificial Intelligence Act”. The regulation will address AI-specific risks, categorize systems according to their risk potential and set requirements for those systems.9 Furthermore, it will regulate conformity assessments as well as enforcement and governance at European and national level. The act consists of the following segments:

Title I concerns the scope and definitions of the regulation. The European Commission opted for a broad scope with a technology-neutral and future-proof delimitation.10 The aim appears to be to capture as many variants of artificial intelligence as possible while providing legal certainty. This is a challenging task, given the notorious difficulty of defining artificial intelligence.11 Additionally, definitions of the key stakeholders in the AI value chain are provided.

Title II defines a list of prohibited artificial intelligence practices, which are deemed too risky or too contrary to European values to be employed. Such prohibited practices include manipulation, exploitation or harm to a person’s body or psyche. Furthermore, specific applications such as general-purpose social scoring by public authorities are prohibited.12

Title III, which will be at the centre of the analysis, deals with the classification of AI systems in Chapter 1 and sets out specific rules for high-risk systems in Chapters 2 and 3.13 Chapter 4 details the framework for notified bodies, which will act as third parties in the conformity assessments detailed in Chapter 5. For AI systems which are not part of another product (“stand-alone AI systems”), a new compliance and enforcement system will be created, while AI systems that are part of another product will follow existing assessment systems.

The remaining titles will only be mentioned briefly for the sake of completeness. While Title IV contains transparency obligations for certain AI systems, Title V deals with measures in support of innovation.14 Titles VI, VII and VIII concern governance and implementation. Title IX creates a legal basis for the creation of codes of conduct, and Titles X, XI and XII contain the final provisions of the regulation.

3.

Scope of the Provision

Only if a system is classified as high-risk must it comply with the requirements on human oversight set out in Article 14 of the proposal, and consequently with the requirement concerning automation bias.

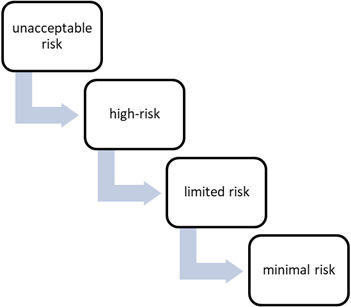

To determine under which category a system falls, the examination steps highlighted in Graph 1 must be undertaken. Firstly, it must be determined whether a system constitutes an unacceptable risk according to Article 5 of the proposal. This can be the case if the technology is specifically prohibited, such as real-time remote biometric identification systems for the purposes of law enforcement (with some exceptions)15. The unacceptable risk can also result from the employment of specific practices such as the exploitation of vulnerabilities of certain groups.16 Should the artificial intelligence system in question not fall within Article 5, and therefore not constitute an unacceptable risk, it may be classified as a high-risk system. According to Article 6, a system shall be considered high-risk if it is intended to be used as a safety component of a product, or is itself a product, which is covered by the specific European Union legislation mentioned in Annex II and has to undergo a third-party conformity assessment.17 Alternatively, an artificial intelligence system may also be considered high-risk if it is used in one of the areas listed in Annex III. These areas include, inter alia, management of critical infrastructure, education, access to private and public services and benefits, law enforcement and migration.

Graph 1: Examination of Risk

Should a system not fall under any of those categories, it may still be determined to be of limited risk. Such is the case if, for example, an AI system is intended to interact with natural persons or to detect emotion.18 Otherwise, the AI system will be considered “minimal risk”.

According to Article 8 of the proposal, only high-risk AI systems must comply with the requirements set out in Chapter 2. Such requirements include the implementation of a risk-management system19, requirements on data governance20, technical documentation21 and record-keeping22, transparency23, human oversight24 as well as accuracy, robustness and cybersecurity.25
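The examination steps described in this section can be read as a simple decision procedure. The following Python sketch is an illustration only: the `SystemProfile` fields and the function names are hypothetical simplifications introduced here, not terms of the proposal, and each boolean stands in for a legal assessment that requires case-by-case analysis. The order of the checks follows the examination order described above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class SystemProfile:
    """Hypothetical, simplified description of an AI system."""
    prohibited_practice: bool = False            # falls within Article 5
    annex_ii_product_or_component: bool = False  # product or safety component under Annex II legislation
    third_party_assessment: bool = False         # must undergo a third-party conformity assessment
    annex_iii_area: bool = False                 # used in an area listed in Annex III
    transparency_trigger: bool = False           # e.g. interacts with natural persons (Article 52)


def classify(profile: SystemProfile) -> RiskLevel:
    """Apply the examination steps in the order described in the text."""
    if profile.prohibited_practice:
        return RiskLevel.UNACCEPTABLE
    # Article 6: both Annex II conditions must be met together.
    if profile.annex_ii_product_or_component and profile.third_party_assessment:
        return RiskLevel.HIGH
    if profile.annex_iii_area:
        return RiskLevel.HIGH
    if profile.transparency_trigger:
        return RiskLevel.LIMITED
    return RiskLevel.MINIMAL


def must_meet_chapter_2(profile: SystemProfile) -> bool:
    """Only high-risk systems must meet Chapter 2, including Article 14."""
    return classify(profile) is RiskLevel.HIGH
```

Under these assumptions, a system used in an Annex III area would be classified as high-risk and would therefore fall under the human oversight requirements, whereas a chatbot outside those areas would only be of limited risk.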

4.

Human Oversight according to Article 14

The proposal requires high-risk AI systems to be designed in such a way that they can be effectively overseen by a natural person.26 The aim of this requirement is to prevent and minimise risks, and it is in line with the proposed idea of “human-centric artificial intelligence”. Human oversight can either be built into the AI system27 or be identified by the provider and implemented by the user.28 Natural persons assigned to oversee the system must understand the system and its limits29, be able to interpret outputs correctly, be able to override or disregard the output30 and be able to stop the system as a whole.31

While the premise seems simple enough, the proposal itself highlights one key problem of human oversight: according to Article 14 par 4 lit (b), the natural person must remain aware of automation bias, especially in the case of decision recommendation systems. The term is explained in the article itself, according to which automation bias is “the possible tendency of automatically relying or over-relying on the output produced by a high-risk AI system”. The term is mentioned only once in the whole document. The accompanying Study to Support an Impact Assessment of Regulatory Requirements for Artificial Intelligence in Europe32 mentions the term only three times, and only in the section on compliance costs. No detailed explanations or requirements on this topic are provided.

5.

Automation Bias in other Fields

While the issue of automation bias is studied in various research fields, the literature on it is severely fragmented.33 The sectors mainly concerned with the issue are aviation and health care.34 The key problem is that decision support systems do not only support the decision-maker but can also change his or her behaviour in unintended ways.35 A definition of the issue is provided in an article from the American Institute of Aeronautics and Astronautics. According to the article, automation bias occurs “[...] when a human decision maker disregards or does not search for contradictory information in light of a computer-generated solution which is accepted as correct. Operators are likely to turn over decision processes to automation as much as possible due to a cognitive conservation phenomenon, and teams of people, as well as individuals, are susceptible to automation bias”.36 The article separates the results of the bias into two types of error: commission and omission. The resulting error can occur either because the natural person fails to notice problems in the absence of an alert by the system (omission) or because the natural person follows the incorrect decision of the support system (commission).37 It should also be mentioned, however, that the definition of the term automation bias and its overlap with the term “complacency bias” remain a subject of academic dispute.38

5.1.

Influencing Factors

Although the problem has been recognized for a long time, comprehensive and holistic studies of the phenomenon are rare. A decisive step towards a model for raising awareness of sociotechnical risks stemming from AI automation was taken in 2021 by Stefan Strauß.39 In his model, he describes the main influencing factors of deep automation bias40 and splits them into two categories: technical factors and human factors. According to his model, the following influencing factors can be identified:

| System Behaviour | User practice |
| --- | --- |
| Usability | User skills |
| Data model quality & ML performance | Resources & practical experience |
| Accountability & scrutiny options | Problem awareness |

Table 1: Strauß’ Model

6.

“Awareness” as Legal Requirement

The model clearly demonstrates that problem awareness is just one of the influencing factors. Yet Article 14 par 4 lit (b) of the proposal states that the natural person tasked with overseeing the system must simply remain “aware” of automation bias. The wording may of course still change in the future. For now, however, the question arises whether awareness is the only obligation this provision entails. Read in isolation, it could be concluded that, in order to comply with the requirement, it would suffice to make the person aware that such biases exist. But the requirement must be assessed in the light of Article 14 of the proposal as a whole.

As the review above has shown, the bias can lead to errors of omission and commission. If an act is committed due to overreliance on the output of the AI system, the human overseeing the system was not able to “decide, in any particular situation, not to use the high-risk AI system or otherwise disregard, override or reverse the output of the high-risk AI system”41. Therefore, one of the obligations under Art 14 of the proposal would be violated. Likewise, if the overreliance on the system results in an omission by the human, that person most likely did not have a full picture of the capacities and limitations of the system or of potential dysfunctions.42

Following this logic, the argument could be made that Art 14 par 4 lit (b) does not add much content to the concept of human oversight. Rather, it would already be contained in other legal obligations. But, as the model mentioned above and the solutions mentioned below demonstrate, awareness is a key factor in dealing with automation bias. Additionally, one must keep in mind that Art 14 of the proposal is first and foremost a compliance requirement that includes technical and organisational measures, as indicated in the supporting study.43 Similar to the system established in the General Data Protection Regulation (GDPR), it is up to those responsible to demonstrate compliance. Upon request of a national authority, a provider must demonstrate conformity with the requirements.44 Similar obligations apply to importers45 and distributors46. Therefore, the responsible entity must produce documentation to demonstrate that appropriate steps have been taken to raise awareness. The other side of the obligation is the competence of the relevant authority to specifically investigate whether such measures have been implemented.

6.1.

National Case Law on Overreliance

While this might seem like a minor detail, past case law has highlighted that this requirement is essential for authorities. Naturally, legal precedent specific to this article does not exist yet. However, a similar problem concerning legal requirements under the GDPR was brought before an Austrian administrative court. The national data protection authority tried to demonstrate the overreliance of administrative staff on an automated assessment.47 The authority argued that Art 22 GDPR should apply, since the decision-making process could be considered fully automated.48 The authority based its argument mostly on the time spent by administrative staff on the evaluation of the output. The argument was rejected by the court, and the case is currently pending before the Higher Administrative Court. The case demonstrates, however, how difficult it is for an authority to prove overreliance on machine output. Bias occurs in the mind of the staff, and proof could most likely only be provided by extensive and expensive studies over time. By shifting the burden of proof and requiring the responsible entity to demonstrate compliance with this specific requirement, supervision by the authority is facilitated.

7.

Compliance Solutions

For entities employing AI systems, the main question will be how to deal with automation bias. Finding solutions is not a trivial task. The fact that automation bias must be dealt with is mentioned regularly. Actual solutions are proposed far less frequently.49 However, a review of freely available literature on solutions to automation bias revealed the following technical and organisational measures to counter its effects. As Strauß’ model demonstrates, influencing factors can be split into two main categories. Hence it seems appropriate to split solutions into two segments: design choices and human resources requirements. It should be noted that the proposed solutions may not apply equally to all systems.

7.1.

Design choices

Screen Design

According to a review by Kate Goddard, Abdul Roudsari and Jeremy C Wyatt, screen design can be an influencing factor in automation bias. The more prominently the recommendation of a decision support system is displayed on a screen, the more likely incorrect advice is to be followed. Similarly, automation bias increases with the amount of detail shown on screen. Thus, automation bias may be decreased by reducing the prominence and detail of an automated recommendation.50

Language

Additionally, the study highlights the role of language in automation bias. Bias can be reduced by relying less on imperative language such as commands and instead focusing on providing supportive information on an issue.51

System Confidence Information

Another way of decreasing bias is to provide system confidence information. This entails not only providing the decision recommendation itself, but also a confidence value alongside the information. Studies were mostly conducted in the area of aviation.52
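The design measures described in this section could be combined when rendering a recommendation for the human overseer. The following Python sketch is an illustration under stated assumptions, not a method taken from the cited studies: the `Recommendation` type, the `render_advice` function, the wording of the messages and the threshold of 0.7 are all hypothetical. It phrases the output as supportive information rather than as a command and always shows a confidence value alongside the recommendation.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    """Hypothetical output of a decision support system."""
    label: str
    confidence: float  # assumed to be a value between 0 and 1


def render_advice(rec: Recommendation, low_confidence_threshold: float = 0.7) -> str:
    """Render a recommendation in non-imperative language with its confidence.

    The threshold is an illustrative assumption, not a value from the literature.
    """
    if not 0.0 <= rec.confidence <= 1.0:
        raise ValueError("confidence must lie between 0 and 1")
    percent = round(rec.confidence * 100)
    lines = [
        # Supportive phrasing instead of a command such as "Reject the application."
        f"The system's assessment suggests: {rec.label}",
        f"System confidence: {percent} %",
    ]
    if rec.confidence < low_confidence_threshold:
        lines.append("Confidence is low; independent verification is advisable.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_advice(Recommendation(label="further review", confidence=0.5)))
```

Whether a particular threshold or wording actually reduces overreliance would have to be tested for the specific system and user group.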

7.2.

Human Resources Requirements

Error Training

Automation bias affects inexperienced users as well as experts.53 However, a study conducted by the Berlin Institute of Technology demonstrated that experiences with failures of automation have a stronger effect on an individual’s trust in the system than correct recommendations.54 This insight suggests that deliberate error training may be an effective solution to automation bias.55

Awareness of Reasoning

Furthermore, automation bias may be reduced by making the user aware of the reasoning process of a decision support system.56

Team vs Individuals

A study published in The International Journal of Aviation Psychology examined the performance of two-person teams versus individuals with regard to automation bias. The study found that there was no significant difference between teams and individuals.57 The study did, however, confirm that awareness of possible errors can significantly reduce the effects of automation bias.

Pressure and constraints

The aforementioned review by Kate Goddard et al. also demonstrated that environmental factors can increase automation bias. The complexity of a task and the time pressure on the decision-maker are factors that must be accounted for when dealing with automation bias.58 Reducing workload may also decrease the potential for automation bias.

The measures listed above demonstrate that dealing with automation bias is not a one-dimensional task. The problem stems from multiple sources. Those responsible must consider not only the design of a system but the whole environment in which it is used. Countermeasures must include technical as well as organisational solutions.

8.

Conclusion

The European Commission has taken an enormous step towards building a first framework for AI in the European Union. In the proposal, automation bias is rightly recognized as a problem of automation, especially of decision support systems. While the obligation to create awareness of automation bias is mentioned only briefly, it will likely entail a plethora of compliance obligations for providers and users of AI systems. However, it is uncertain what measures the individual user must implement to achieve compliance. Even though efforts to study the phenomenon and its solutions have increased, further research into the issue is necessary. The literature review has demonstrated that ideas for solutions are already available. But tests must be conducted to assess their usefulness in a practical setting. Additionally, the creation of guidelines on how to deal with automation bias seems desirable to achieve a level of legal certainty.

9.

Acknowledgments

The project is being carried out by the Legal Informatics Working Group, Juridicum, University of Vienna, under the direction of Prof. Dr. Dr. Erich Schweighofer. The authors gratefully acknowledge essential support and important comments.

10.

Literature

Cummings, M.L., Automation Bias in Intelligent Time Critical Decision Support Systems, https://doi.org/10.2514/6.2004-6313 (accessed on 30.10.2021), 2012.

Dzindolet, Mary T./Peterson, Scott A./Pomranky, Regina A./Pierce, Linda G./Beck, Hall P., The role of trust in automation reliance, International Journal of Human-Computer Studies, 2003, Volume 58, Issue 6, pp. 697–718.

DEK, Gutachten der Datenethikkommission. https://www.bmi.bund.de/SharedDocs/downloads/DE/publikationen/themen/it-digitalpolitik/gutachten-datenethikkommission.pdf?__blob=publicationFile&v=4 (accessed on 30.10.2021) p. 166.

Goddard, Kate/Roudsari, Abdul/Wyatt, Jeremy C., Automation Bias: A Systematic Review of Frequency, Effect Mediators, and Mitigators, Journal of the American Medical Informatics Association, 2012, Volume 19, Issue 1, pp. 121–127.

Lyell, David/Coiera, Enrico, Automation bias and verification complexity: a systematic review, Journal of the American Medical Informatics Association, 2017, Volume 24, Issue 2, pp. 423–431.

Parasuraman, Raja/Manzey, Dietrich, Complacency and Bias in Human Use of Automation: An Attentional Integration, Human Factors – The Journal of the Human Factors and Ergonomics Society, 2010, Volume 52, Issue 3, pp. 381–410.

Pfister, Jonas, Verantwortungsbewusste Digitalisierung am Beispiel des ‚AMS-Algorithmus‘ in Schweighofer, Erich/Hötzendorfer, Walter/Kummer, Franz/Saarenpää, Ahti (Eds.), IRIS 2020 – Proceedings of the 23rd International Legal Informatics Symposium, pp. 405–411.

Reichenbach, Juliane/Onnasch, Linda/Manzey, Dietrich, Misuse of automation: The impact of system experience on complacency and automation bias in interaction with automated aids, in: Proceedings of the Human Factors and Ergonomics Society 54th annual meeting, 2010, pp. 374–378.

Russell, Stuart/Norvig, Peter, Künstliche Intelligenz – ein moderner Ansatz, 3rd edition, Pearson, München 2012.

Skitka, Linda J./Mosier, Kathleen L./Burdick, Mark/Rosenblatt, Bonnie, Automation Bias and Errors: Are Crews Better Than Individuals?, The International Journal of Aviation Psychology, 2000, Volume 10, Issue 1, pp. 85–97.

Strauss, Stefan, Deep Automation Bias: How to Tackle a Wicked Problem of AI?, Big Data and Cognitive Computing, 2021, Volume 5, Issue 2, p. 18.

Strauss, Stefan, From Big Data to Deep Learning: A Leap towards Strong AI or ‘Intelligentia Obscura’? Big Data and Cognitive Computing, 2018, Volume 2, Issue 3, p. 16.

- 1 COM (EU), Proposal for a Regulation of the European Parliament and of the Council laying down harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain Union Legislative Acts, COM (2021) 206 final, p. 2.

- 2 COM (EU), Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of Regions, Artificial Intelligence for Europe, COM (2018) 237 final.

- 3 COM (EU), Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of Regions, Artificial Intelligence for Europe, COM (2018) 237 final, p. 1.

- 4 COM (EU), Study to Support an Impact Assessment of Regulatory Requirements for Artificial Intelligence in Europe, Final Report (D5), p. 7.

- 5 COM (EU), Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of Regions, Building Trust in Human-Centric Artificial Intelligence, COM (2019) 168 final, p. 4.

- 6 COM (EU), White Paper on Artificial Intelligence, COM (2020) 65 final.

- 7 COM (EU), Regulatory framework proposal on artificial intelligence, https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai (accessed on 30. October 2021), 2021.

- 8 COM (EU), Proposal for an Artificial Intelligence Act, p. 2.

- 9 COM (EU), Regulatory framework proposal on artificial intelligence, https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai (accessed on 30. October 2021), 2021.

- 10 COM (EU), Proposal for an Artificial Intelligence Act, p. 12.

- 11 Compare Russell/Norvig, Künstliche Intelligenz – ein moderner Ansatz, 3rd edition, Pearson, München 2012, p. 22.

- 12 COM (EU), Proposal for an Artificial Intelligence Act, p. 13.

- 13 COM (EU), Proposal for an Artificial Intelligence Act, p. 13.

- 14 COM (EU), Proposal for an Artificial Intelligence Act, p. 14.

- 15 Art 5 par 2 Proposal for an Artificial Intelligence Act.

- 16 Art 5 par 1 lit b Proposal for an Artificial Intelligence Act.

- 17 Art 6 par 1 Proposal for an Artificial Intelligence Act.

- 18 Art 52 Proposal for an Artificial Intelligence Act.

- 19 Art 9 Proposal for an Artificial Intelligence Act.

- 20 Art 10 Proposal for an Artificial Intelligence Act.

- 21 Art 11 Proposal for an Artificial Intelligence Act.

- 22 Art 12 Proposal for an Artificial Intelligence Act.

- 23 Art 13 Proposal for an Artificial Intelligence Act.

- 24 Art 14 Proposal for an Artificial Intelligence Act.

- 25 Art 15 Proposal for an Artificial Intelligence Act.

- 26 Art 14 par 1 Proposal for an Artificial Intelligence Act.

- 27 Art 14 par 3 lit a Proposal for an Artificial Intelligence Act.

- 28 Art 14 par 3 lit b Proposal for an Artificial Intelligence Act.

- 29 Art 14 par 4 lit a Proposal for an Artificial Intelligence Act.

- 30 Art 14 par 4 lit c & d Proposal for an Artificial Intelligence Act.

- 31 Art 14 par 4 lit Proposal for an Artificial Intelligence Act.

- 32 COM (EU), Study to Support an Impact Assessment of Regulatory Requirements for Artificial Intelligence in Europe, Final Report (D5).

- 33 Lyell/Coiera, Automation bias and verification complexity: a systematic review, Journal of the American Medical Informatics Association, 2017, Volume 24, Issue 2, p. 430.

- 34 Parasuraman/Manzey, Complacency and Bias in Human Use of Automation: An Attentional Integration, Human Factors – The Journal of the Human Factors and Ergonomics Society, 2010, Volume 52, Issue 3, pp. 381–410.

- 35 Ibid.

- 36 Cummings, Automation Bias in Intelligent Time Critical Decision Support Systems, https://doi.org/10.2514/6.2004-6313 (accessed on 30.10.2021), 2012, p. 2.

- 37 Ibid.

- 38 Lyell/Coiera, Automation bias and verification complexity: a systematic review, Journal of the American Medical Informatics Association, 2017, Volume 24, Issue 2, pp. 423–431.

- 39 Strauss, Deep Automation Bias: How to Tackle a Wicked Problem of AI? Big Data and Cognitive Computing, 2021, Volume 5, Issue 2, p. 18.

- 40 Strauss, From Big Data to Deep Learning: A Leap towards Strong AI or ‘Intelligentia Obscura’? Big Data and Cognitive Computing, 2018, Volume 2, Issue 3, p. 16.

- 41 Art 14 par 4 lit d Proposal for an Artificial Intelligence Act.

- 42 Art 14 par 4 lit a Proposal for an Artificial Intelligence Act.

- 43 COM (EU), Study to Support an Impact Assessment of Regulatory Requirements for Artificial Intelligence in Europe, Final Report (D5), p. 132.

- 44 Art 16 lit j, Art 23 Proposal for an Artificial Intelligence Act.

- 45 Art 26 par 5 Proposal for an Artificial Intelligence Act.

- 46 Art 27 par 5 Proposal for an Artificial Intelligence Act.

- 47 DSB 213.1020 2020-0.513.605; https://www.derstandard.at/story/2000122684131/gericht-macht-weg-fuer-umstrittenen-ams-algorithmus-frei.

- 48 See also Pfister, Verantwortungsbewusste Digitalisierung am Beispiel des ‚AMS-Algorithmus‘ in SCHWEIGHOFER/HÖTZENDORFER/KUMMER/SAARENPÄÄ (Eds.), IRIS 2020 – Proceedings of the 23rd International Legal Informatics Symposium, pp. 405–411.

- 49 Compare e.g. DEK, Gutachten der Datenethikkommission. https://www.bmi.bund.de/SharedDocs/downloads/DE/publikationen/themen/it-digitalpolitik/gutachten-datenethikkommission.pdf?__blob=publicationFile&v=4 (accessed on 30.10.2021) p. 166.

- 50 Goddard/Roudsari/Wyatt, Automation Bias: A Systematic Review of Frequency, Effect Mediators, and Mitigators, Journal of the American Medical Informatics Association, 2012, Volume 19, Issue 1, p. 125.

- 51 See also Parasuraman/Manzey, Complacency and Bias in Human Use of Automation: An Attentional Integration, Human Factors – The Journal of the Human Factors and Ergonomics Society, 2010, Volume 52, Issue 3, p. 396.

- 52 Ibidem.

- 53 Parasuraman/Manzey, Complacency and Bias in Human Use of Automation: An Attentional Integration, Human Factors – The Journal of the Human Factors and Ergonomics Society, 2010, Volume 52, Issue 3, p. 381.

- 54 Reichenbach/Onnasch/Manzey, Misuse of automation: The impact of system experience on complacency and automation bias in interaction with automated aids, in: Proceedings of the Human Factors and Ergonomics Society 54th annual meeting, 2010, p. 375.

- 55 See also Skitka/Mosier/Burdick/Rosenblatt, Automation Bias and Errors: Are Crews Better Than Individuals?, The International Journal of Aviation Psychology, 2000, Volume 10, Issue 1, p. 96.

- 56 Dzindolet/Peterson/Pomranky/Pierce/Beck, The role of trust in automation reliance, International Journal of Human-Computer Studies, 2003, Volume 58, Issue 6, pp. 697–718.

- 57 Skitka/Mosier/Burdick/Rosenblatt, Automation Bias and Errors: Are Crews Better Than Individuals?, The International Journal of Aviation Psychology, 2000, Volume 10, Issue 1, p. 95.

- 58 Goddard/Roudsari/Wyatt, Automation Bias: A Systematic Review of Frequency, Effect Mediators, and Mitigators, Journal of the American Medical Informatics Association, 2012, Volume 19, Issue 1, p. 125.