Law is an artefact, or a technology, that, like many others, is an expression of human nature and, as such, has evolved throughout the existence of humankind. Like other current technologies – computer science and genetics, in particular – it has undergone an exponential acceleration in recent years. Therefore, even in the legal realm, a fundamental question arises, which can be tackled from a twofold perspective, theoretical and practical: how can the interaction between technologies and human beings be properly designed? This is no easy challenge because, since different technologies are combined in their market uptake, it is difficult to evaluate the social impact of each one.

1.1.

100 years of legal thought, 25 years of IRIS: between history and historicism

Over the last century, legal thought has consistently reshaped and intertwined the three main traditional narratives, based respectively on the concepts of ethical value, formal rule, and social practice. An example of the first can be found in John Finnis, who reframed human rights as a set of principles engraved in the natural order of Being2; for the second, we can recall Hans Kelsen, who famously proposed a ‘pure’ theory of law, formulated by separating the logical fabric of the law, divided between prescription and sanction, from its legal content3; for the third, Eugen Ehrlich stands out with his idea of law as a direct expression of the interests embodied in social ties4.

Fifty years ago, legal scholars adopted the theoretical model of cybernetics5. From this perspective, the idea of ‘autopoiesis’ has been applied to describe the social interactions involving both the legal system6 and constitutional interpretation7. This approach marked a considerable departure from tradition, being based on the abstract concept of “information”, which has since flooded into many different social sciences8.

More recently, 25 years ago – for the sake of celebration, let’s say, at the beginning of the IRIS era – a further step was made, due to the widespread deployment of ICTs. In the well-known 1997 Reno v. ACLU ruling9, the US Supreme Court described the Internet as a “wholly new medium”, opening a Pandora’s box of claims for the redefinition of traditional legal categories in such a context. This was the dawn of cyberlaw, a historical moment when the capacity of ICTs to enable and to regulate forms of aggregation ignited discussion among different perspectives. Some claimed that “virtual communities” were to be considered a new kind of society, technology itself being an intrinsic normative force – hence the motto “code is law”10 – while others qualified technology as an irrepressible component of every legal provision – the “lex informatica” – even outside virtual communities11. Lastly, there were those who fought against these new ideas, defending traditional beliefs12.

Today, the advent of Distributed Ledger Technologies – with the vision of the “lex cryptographia”13 – has confirmed that it is possible to explore new territory in the legal realm, where society and technology are not opposed but integrated. On this view, it can be argued that law is no longer based only on trusted human relations14, but also on a trustless “rough consensus”, since it relies directly on how technologies are engineered, deployed, and used. Consequently, legal regulation becomes a matter of design in both a social and a technological sense.

1.2.

Technological design and human-machine interaction: the problem of “request to intervene”

In general terms, the close relationship – or better, the overlap – between law and technology requires reframing the concept of law, and the abovementioned narratives qualifying legal regulation in terms of ethical values, formal rules, and social practices. This applies both at a collective or even global level – as a matter of institutional governance, it could be said – and at an individual level, in the interaction of human beings with their technological devices. Such issues seem urgent when related to artificial intelligence (henceforth, AI), whose social impact is enormous in scale and unpredictable in its consequences15.

In this contribution we address the topic of the technological design of human interaction with AI, providing an up-to-date overview focused on the legal issues concerning the “request to intervene”. The first part describes the ideal context in which the discussion takes place – the role of technological design in the relationship between values, rules, and practices – and the different theories on the ethics of technology, mainly Value Sensitive Design (VSD) and Responsible Research and Innovation (RRI). In the second part we discuss the legitimacy and the conditions under which the control of a system can be transferred from individuals to artificial agents, and back. In the third part, the previous conclusions are put into the context of “Industry 4.0” to test their validity.

2.

The central role of technological design

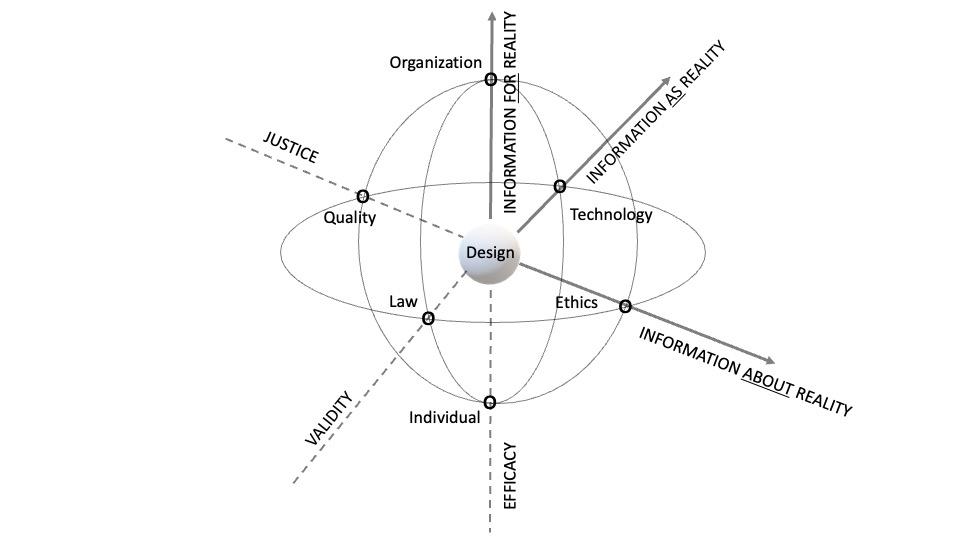

The tripartite narrative of legal thought can be reformulated in the light of the recent “Philosophy of Information”16, which builds on the achievements of cybernetics. In this sense, it is useful to take up the three different senses of information, “information as reality”, “information about reality” and “information for reality” – which stemmed from previous studies17 – and associate the first with the formal validity of the rules, the second with the values contained in them and the third with their social effectiveness.

Consequently, we can draw a conceptual model in which each of these versions of the concept of “information” corresponds to a specific dimension. On the vector named “information as reality”, which concerns in general the formal validity of rules, we can place law and technology. This represents how these two components complement each other in determining the way in which masses and individuals are conditioned.

On the level of “information about reality”, i.e. ethical values, we can place ethics – obviously – but also the industrial standards (ISO standards, technological certifications, quality marks) through which technological design incorporates values in order to operationalize them. This applies, as far as our contribution is concerned, to the future European “AI Act”18, according to which the ethicality of an algorithm will not be a purely substantive issue, but a procedural one, since it will have to be subject not only to a validation process before being placed on the market, but also to constant monitoring during its use.

On the level of “information for reality”, i.e. effectiveness, we find the relationship between the individual and the social group (family, workplace, or, in general, the legal system to which one belongs). This is where the problem of the political legitimacy of public institutions arises, and where the inner drive motivating individuals to abide by social rules operates. In the light of the “philosophy of information”, law is configured here as “information for reality”, since it is essentially conceived as a command or a set of practical instructions.

Technological design can be placed at the intersection of these three vectors. Indeed, no artefact, whether tangible or intangible, is neutral: each reflects values, rules, and social practices in its context. Design, in this sense, can be qualified as an expression of human creativity limited by a set of variables defined at a higher level of complexity19.

Figure 1: Law, technological design and information

To define how technological design can be implemented, it is crucial to shed light on the twenty-year-long discussion concerning the concrete ways of incorporating values into the use of artefacts20. In this regard it is noteworthy that the best-known methodologies, such as Value Sensitive Design (VSD)21 and Responsible Research and Innovation (RRI)22, have recently been further developed to include AI technologies23. Despite the results accomplished by these approaches in drawing up a consistent methodology, experts raise several concerns, the most relevant of which are the risk of arbitrariness in the choice of values to be axiomatized24, the difficulty of translating the proposed requirements into practice, since they are formulated in too general and abstract a manner, the need to distinguish “engineering ethics” from “machine ethics”, and the consequent disputable qualification of AI as a moral agent only in the presence of true autonomy25. In the background of this debate lies the main concern of AI governance, i.e. the consideration of the technological impact from an ethical, political and social point of view, which therefore concerns not only labour law and the protection of personal data, but also other disciplines such as consumer law and competition law26.

3.

Technological design and the problem of the “request to intervene”

The “request to intervene” is a fundamental concept in the interaction between autonomous systems and humans, which is located at level 3 of autonomy as defined both by Levels of Human Control Abstraction (LHCA)27 and by SAE28. Despite the general agreement on this assumption, there is currently a lively discussion concerning how it can be implemented whenever it involves a decision requiring a trade-off between values, especially if these include human physical integrity. In this section, we focus on three aspects: (1) the design of the interface, (2) the training required of the human operator for a risk-free interaction and (3) the allocation of responsibility.

Concerning the first aspect, it has to be clarified that the “request to intervene” is inherent to an autonomous system. More precisely, it depends on the inability of the system to manage events outside its Operational Design Domain (ODD). Due to this incapacity, an intervention by an external agent – the human controller, in this case – is required to manage the interaction with the environment. In this sense, the first design choice is the one that leads to the fundamental setting of the ODD. This choice defines the basic setting and profoundly influences the structural and functional requirements of the system, hence its working conditions. More precisely, the ODD is a combination of several design choices: (1) social and political factors, including social acceptance and trust in technologies29, (2) legal requirements and (3) engineering standards. This synthesis encompasses both the degree of automation (“how much” automation is expected) and the processes to be automated (“what” to automate).
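The dependence of the “request to intervene” on the ODD can be illustrated with a minimal sketch. It assumes, purely for illustration, that an ODD can be reduced to admissible ranges for a few monitored conditions; the class, the function and the condition names (`OperationalDesignDomain`, `control_step`, `temperature_c`, `line_speed_m_s`) are hypothetical and are not drawn from any standard or from the systems discussed here.

```python
from dataclasses import dataclass


@dataclass
class OperationalDesignDomain:
    """Hypothetical ODD: an admissible (minimum, maximum) range per condition."""
    limits: dict

    def violations(self, conditions: dict) -> list:
        """Return the names of conditions that are missing or out of range."""
        violated = []
        for name, (low, high) in self.limits.items():
            value = conditions.get(name)
            if value is None or not (low <= value <= high):
                violated.append(name)
        return sorted(violated)


def control_step(odd: OperationalDesignDomain, conditions: dict) -> dict:
    """Decide who holds control for the current observation.

    Inside the ODD the system keeps control; outside it, the system
    issues a request to intervene and lists the reasons for the human.
    """
    violated = odd.violations(conditions)
    if violated:
        return {"controller": "human",
                "request_to_intervene": True,
                "reasons": violated}
    return {"controller": "system",
            "request_to_intervene": False,
            "reasons": []}


if __name__ == "__main__":
    # Illustrative limits only; real ODDs result from the social,
    # legal and engineering choices described above.
    odd = OperationalDesignDomain({"temperature_c": (0, 60),
                                   "line_speed_m_s": (0.0, 2.0)})
    print(control_step(odd, {"temperature_c": 75, "line_speed_m_s": 1.0}))
```

In this sketch a missing sensor reading is treated like an out-of-range value, which is itself a design choice: it errs on the side of handing control back to the human, and therefore shifts part of the burden, and potentially of the responsibility, onto the operator.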

The second aspect, namely the training of the human operator, is deeply connected to the first. Only after a thorough analysis of the interaction of the device with its environment and the development of an efficient interface can a human operator be trained to handle the request to intervene. Training can therefore be considered a continuous process of adaptation of the human to the machine, aimed at balancing a task with its intrinsic level of risk, given that a risk-free scenario is impossible to achieve. In this sense, the learning process30 needs to be balanced against what is called “deskilling”, which is related to an excess of trust in the autonomous system31. In this respect, legal and technical design play a key role, since they have to establish not only the ODD, as seen above, but also how the human is expected to cope with the transfer of authority over the processes controlled32. Specifically, they determine: (1) how the handover has to be done, (2) what training the human controller has to receive, (3) the trade-off among the many interests and values at stake.

The third aspect regards liability in the “request to intervene” when the system fails, commonly due to “bugs”, human error, or both. Of course, risks are a by-product of every human activity, yet it is possible to separate “bugs” or errors that are unavoidable from those that are not, and therefore to find suitable grounds on which to assign liability.

Therefore, the main challenge in the design of the “request to intervene” is to avoid targeting autonomous agents as easy scapegoats for human errors33, as well as to avoid the “moral crumple zone”34, namely the cases in which the human operator is wrongly held responsible. In this sense, it is crucial for legislators to define a proper strategy of AI governance to protect human beings while implementing future-proof regulations without hindering technological innovation.

4.

Industry 4.0, Machine Vision and Artificial Intelligence

The expression “Industry 4.0” is quite new. At the Hanover Fair in 2011, the term was popularized for the first time35. Curiously, it was coined to define the upcoming industrial revolution, making this the first time that a revolution had been named before it happened. Such a paradox is only apparent, since our societies are increasingly aware of the effects of technological innovation, especially regarding the speed of change, the scale of impact and the depth of transformation36. Furthermore, reports from the World Economic Forum (WEF) show that this set of technologies will be crucial in addressing the current need to reduce the energy consumption and increase the environmental sustainability of industrial production. In this respect, many countries have already adopted specific strategies to pursue these goals37; likewise, the European Union is relying on them to foster economic growth and the resilience of the internal market after the COVID-19 pandemic38.

The social impact expected from the advent of Industry 4.0 is still under scrutiny, although experts agree that it is useful to consider some crucial factors, which are expected to become even more pivotal once the pandemic ends and, hopefully, investment returns to its regular flow39.

First, the impact of automation on the labour market is still uncertain. International competition and low labour costs require a strategy aimed at obtaining the best results with the most efficient use of resources. Companies are re-inventing their business models and strategies to increase automation, augmented communication, and self-monitoring. Hence, they are planning to deploy systems that can diagnose their own limits and blockages without human intervention.

Secondly, there is the need to develop strategic public-private partnerships to foster digital innovation. Since the impact of an increasingly complex industrial model, where humans and machines combine their efforts, is still unknown, it is not enough to create monitoring software alone; it is also important to develop shared strategies to steer the transition to Industry 4.0 in a sustainable way.

The third aspect is total quality and safety. Incredibly, in many industries some quality control activities are entrusted to workers who are required to check semi-finished or finished products, even over long working shifts. In the steel industry of the past, for example, workers had to get close to burning metal to detect and report any defect, thus exposing themselves even to deadly risks. Industrial quality control was implemented not only to market a valuable product, but also to monitor the manufacturing processes, since an imperfection in the final product can reveal malfunctions that can put workers at risk. With current information technologies it is possible to implement automated quality control systems, enabling a proactive approach which prevents failures in the workflow and allows real-time data exchange with the productive ecosystem.

The importance of these three aspects combined can be exemplified by considering the deployment of machine vision and artificial intelligence in “Industry 4.0”. Indeed, when implementing AI-supported machine vision systems in an industrial plant, it is essential to embed them in an IoT platform40 that allows the human workforce to monitor – even remotely – the manufacturing processes and supports the analysis of the data generated by machines. These functions are greatly simplified if the interfaces are intuitive, flexible, and usable. Of course, it can be claimed that human oversight can be easily replaced by artificial agents, especially in repetitive tasks, which are more likely to be affected by human errors due to operator fatigue. In such cases the monitoring tasks, which ordinarily are performed by human operators, become integrated directly into the system. In this scenario, the concept of “work” needs to be reframed. Notably, sociologist Neil Postman, who studied the changes in language related to the modification induced by each new technology41, argues that today “work” certainly has a different meaning than in the past. Indeed, through the introduction of automation systems and AI into industries, many repetitive, dangerous, or demanding jobs have been delegated to machines. These are activities in which workers are required to classify objects, count them, or check for flaws. It is known that the introduction of these systems has been interpreted by some as a threat to work; this phenomenon is called “neo-luddism”42 and it expresses a sense of rejection of machines, seen as a threat to workers’ jobs. But does it still make sense for humans to count iron bars in a dangerous plant?43 The displacement of workers, however, should not be seen just as a side effect of the development of autonomous systems, but as a primary challenge to be addressed by a proper AI governance strategy, which goes beyond the simplistic approach summarized in the acronym HABA-MABA (“Humans are better at/Machines are better at”), which has underpinned many initiatives to date44.

Therefore, “Industry 4.0” is paradigmatic of the many challenges and opportunities raised by technological design. As Baumer and Silberman argue: “Being skeptical about technology does not mean rejecting it” but “the argument here is that when we do build things, we should engage in a critical, reflective dialog about how and why these things are built”45. Indeed, if it is true that the purpose of manufacturers is to produce marketable products which can articulate an ecosystem of services, the adoption of autonomous systems can relieve humans from physical threats and increase productivity; hence it is an opportunity that should be regarded with favour.

To conclude on this topic, it could be argued that “Industry 4.0” is not the destination of technological progress, but a further step towards the unknown and, furthermore, an intrinsically limited concept. In 2019 Gerd Leonhard introduced the neologism “androrithm”46 to indicate everything that cannot be converted into algorithms, that is, the activities in which humanity has a unique and irreplaceable value, as a sign of a civilized and evolved society freed from those activities that can be performed by machines. We should become aware that, precisely because machines will fortunately meet most human productive needs, humanity will have to rethink its role in the universe and perhaps also understand that without human consumption and needs, production has no meaning47. Human resources are the most valuable asset in any working context and can be used for purposes that are more satisfying for workers and more interesting for companies, once mortifying jobs that do not express the value of workers have been eliminated. This will be not a loss but a real achievement, provided that we are able to discuss the true meaning of the word “work”.

5.

Conclusions

The “request to intervene” epitomises, from a practical perspective, the theoretical issue of balancing human needs and AI potential, which has recently become widely known as “Human-centric AI”. Paradoxically, at the centre of the model proposed here we find not the figure of a human being, but an abstract concept, namely that of design. This is somehow truth-revealing, since it represents the current risk of the replacement of humans by AI, which can be considered the most advanced form of creative artefact ever produced.

From such a perspective, we can argue that, among the many challenges to be faced in the coming years, the most crucial is to adopt AI technologies not to passively replicate current industrial mass production, but to re-invent economic models so as to optimise sustainability in a proactive way. This is the most important challenge for AI governance. Therefore, it should be clear, at least in the academic debate, that social acceptance and trust in technology are indeed crucial factors, although they should be neither confused with nor traded for respect for fundamental rights and individual freedoms. The re-invention of the concept of “work”, in this sense, is an unavoidable consequence, which would likely require a long period of adaptation and even new technological solutions, during which it is important not to lose sight of its inevitable link with human dignity.

- 1 This contribution is the result of joint research of the co-authors. Individual contributions can be attributed as follows: Federico Costantini, paragraphs 1 and 2; Lorenzo Genna, paragraph 3, Giuseppe Parisi, paragraph 4.

- 2 Finnis, Natural law and natural rights, Clarendon Press, Oxford, 1980.

- 3 Kelsen, Reine Rechtslehre. Einleitung in die rechtswissenschaftliche Problematik, Deuticke, Leipzig und Wien, 1934.

- 4 Ehrlich, Grundlegung der Soziologie des Rechts, Duncker und Humblot, München, 1913.

- 5 Wiener, Cybernetics or control and communications in the animal and the machine, Actualités scientifiques et industrielles, 1053, Hermann & Cie-The Technology Press, Paris-Cambridge, 1948.

- 6 Luhmann, The Autopoiesis of Social Systems. In: Geyer F., van der Zouwen J. (Eds.), Sociocybernetic Paradoxes, Sage, London, 1986, p. 172–192.

- 7 Teubner, Recht als autopoietisches System, Suhrkamp, Frankfurt am Main, 1989.

- 8 Gleick, The Information: A History, a Theory, a Flood, HarperCollins, New York, 2012.

- 9 Reno v. American Civil Liberties Union, 521 U.S. 844 (1997), https://supreme.justia.com/cases/federal/us/521/844/.

- 10 Lessig, The Zones of Cyberspace, Stanford Law Review, 48, 5, 1996, p. 1403–1411.

- 11 Reidenberg, Lex Informatica: The Formulation of Information Policy Rules through Technology, Texas Law Review, 76, 1998, p. 553–593.

- 12 Easterbrook, Cyberspace and the Law of the Horse, University of Chicago Legal Forum, 1996, p. 207–216.

- 13 De Filippi/Wright, Blockchain and the Law: The Rule of Code, Harvard University Press, Cambridge (MA), 2018.

- 14 Luhmann, Vertrauen. Ein Mechanismus der Reduktion sozialer Komplexität, Enke, Stuttgart, 1968.

- 15 See among many contributions Coeckelbergh, AI Ethics, The MIT Press, Cambridge (MA), 2020, Dignum, Responsible Artificial Intelligence. How to Develop and Use AI in a Responsible Way, Springer, Cham, 2019.

- 16 Floridi, The Philosophy of Information, Oxford University Press, Oxford, 2013.

- 17 Costantini/Galvan/De Stefani/Battiato, Assessing “Information Quality” in IoT Forensics: Theoretical Framework and Model Implementation, Journal of Applied Logics – IfCoLog Journal of Logics and their Applications, 8, 9, 2021, p. 2373–2406.

- 18 Proposal for a Regulation (EU) Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain union legislative acts, COM/2021/206 final, https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206.

- 19 Floridi, The logic of information. A theory of philosophy as conceptual design, Oxford University Press, Oxford-New York, 2019.

- 20 Winkler/Spiekermann, Twenty years of value sensitive design: a review of methodological practices in VSD projects, Ethics and Information Technology, 23, 1, 2018, 17–21.

- 21 VSD is defined as „a theoretically grounded approach to the design of technology that accounts for human values in a principled and comprehensive manner throughout the design process“, Friedman/Kahn/Borning/Huldtgren, Value Sensitive Design and Information Systems, in: Early engagement and new technologies: Opening up the laboratory, Doorn/Schuurbiers/van de Poel/Gorman (eds.), Springer Netherlands, Dordrecht, 2013, 55–95, p. 56. Some of the contributors are pioneers of the discipline, see Friedman, Value-sensitive design, in Interactions, 3, 6 1996, 16–23. This methodology consists in the adoption of three distinct analyses: the first (conceptual dimension) concerns the identification of the subjects and values involved and the conflicts between them generated by the technology; the second (empirical dimension) concerns the observation of the social context of reference and therefore of the social organisation in which the technology is inserted; the third (technical dimension) pertains to the way in which the technological device must be conceived or designed to respect the values set as binding.

- 22 „RRI is a transparent, interactive process by which societal actors and innovators become mutually responsive to each other with a view on the ethical acceptability, sustainability and societal desirability of the innovation process and its marketable products (in order to allow a proper embedding of scientific and technological advances in our society)“ von Schomberg, Prospects for technology assessment in a framework of responsible research and innovation, in: Technikfolgen abschätzen lehren: Bildungspotenziale transdisziplinärer Methoden, Dusseldorp/Beecroft (eds), VS Verlag für Sozialwissenschaften, Wiesbaden, 2012, 39–61. For an alternative approach, see Stilgoe/Owen/Macnaghten, Developing a framework for responsible innovation, in Research Policy, 42, 9, 2013, 1568–1580. This is the approach adopted by the European Union since the Horizon2020 research programmes and involves a process of participatory design of technological devices, Boenink/Kudina, Values in responsible research and innovation: from entities to practices, in Journal of Responsible Innovation, 7, 3, 2020, 450–470.

- 23 Morley/Floridi/Kinsey/Elhalal, From What to How: An Initial Review of Publicly Available AI Ethics Tools, Methods and Research to Translate Principles into Practices, in Science and engineering ethics, 26, 4, 2020, 2141–2168.

- 24 Winfield, Ethical standards in robotics and AI, in Nature Electronics, 2, 2, 2019, 46–48.

- 25 According to Moor, artificial Agents should be divided into four classes, see Moor, The Nature, Importance, and Difficulty of Machine Ethics, in IEEE Intelligent Systems, 21, 4, 2006, 18–21.

- 26 Eitel-Porter, Beyond the promise: implementing ethical AI, in AI and Ethics, 1, 1, 2020, 73–80.

- 27 LHCA describes how an operator controls a system based on the tasks performed and the level of detail of decisions made by the operator. It spans from level 1, in which the operator controls each aspect of the system, to level 5, in which the operator enters pre-launch mission goals and the system operates independently and autonomously. LHCA level 3 is named “parametric control”. At this level, the human operator inputs parameters, with which the system is expected to comply using its sensors and algorithms. The oversight – and its consequent responsibility – is maintained by the operator, Johnson/Miller/Rusnock/Jacques, A framework for understanding automation in terms of levels of human control abstraction, in IEEE International Conference on Systems, Man and Cybernetics (SMC), Banff, AB, 2017, 1145–1150, doi: 10.1109/SMC.2017.8122766.

- 28 Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles, see Shi/Gasser/Seeck/Auerswald, The Principles of Operation Framework: A Comprehensive Classification Concept for Automated Driving Functions, in SAE International Journal of Connected and Automated Vehicles, 3, 1, 2020, 27–37.

- 29 Lewis/Sycara/Walker, The Role of Trust in Human-Robot Interaction, in: Abbass/Scholz/Reid, Hoffman et al, Foundations of Trusted Autonomy, 2013, 135–159.

- 30 In order to manage automation, new procedures are required, Hawley, “Not By Widgets Alone”, in Armed Forces J., Feb. 2011, http://armedforcesjournal.com/not-by-widgets-alone/ (accessed 15 December 2021).

- 31 Lee/Moray, Trust, control strategies and allocation of function in human-machine systems, in Ergonomics 1992, 1243–1270.

- 32 Inagaki/Thomas, A critique of the SAE conditional driving automation definition, and analyses of options for improvement, Cognition, Technology & Work, 21, 2018, 569–578.

- 33 Nissenbaum, Accountability in a computerized society, in Science and Engineering Ethics, 2, 1996, p. 25–42.

- 34 Elish, Moral Crumple Zones: Cautionary Tales in Human-Robot Interaction, in Engaging Science, Technology, and Society, 5, 2019, 40–60.

- 35 Liao/Deschamps/de Freitas Rochas Loures/Ramos, Past, present and future of Industry 4.0 – a systematic literature review and research agenda proposal, in Int. J. Prod. Res. 55, 2017, 3609–3629.

- 36 Harari, Homo Deus, HarperCollins Publishers, New York, 2016.

- 37 „Germany is the preemptor for Industry 4.0 policy; followed by the U.S., which proposed the Advanced Manufacturing Partnership; China, which drafted China Manufacturing 2025; and Taiwan, which drew up Taiwan Productivity 4.0. Since Industry 4.0 will trigger the next wave of industrial competition, it is necessary for nations to arrange development strategies to confront incoming challenges“, Lin/Shyu/Ding, A Cross-Strait Comparison of Innovation Policy under Industry 4.0 and Sustainability Development Transition, in Sustainability, 9, 5, 2017, 786, www.mdpi.com/journal/sustainability (accessed on September 2021).

- 38 Council Regulation 2020/2093 of 17 December 2020 laying down the multiannual financial framework for the years 2021 to 2027, OJ L 433I, p. 11–22, 2020.

- 39 Coombs, Will COVID-19 be the tipping point for the Intelligent Automation of work? A review of the debate and implications for research, in: International Journal of Information Management, 5, 2020.

- 40 For an example of a machine operation monitoring system, see: https://www.beantech.it/en/technologies/brainkin/.

- 41 Mason, Science Fiction in Social Education. Exploring Consequences of Technology, in Social Education, 77, 3, 2013, 132–134 (156).

- 42 Glendinning, Notes toward a Neo-Luddite Manifesto, 1990, The Anarchist Library, https://theanarchistlibrary.org (accessed on October 2021).

- 43 See an innovative bar counting system in https://www.youtube.com/watch?v=cP65MLg4wJ0.

- 44 Fitts, Human Engineering for an Effective Air Navigation and Traffic Control System, by the National Academy of Sciences, Washington, 1951.

- 45 Baumer/Silberman, When the implication is not to design (Technology), proceedings of the SIGCHI conference on human factors in computing systems, ACM, Vancouver, 2011, 2271–2274.

- 46 See: https://www.futuristgerd.com/2016/09/what-are-androrithms/.

- 47 Ferraris, Documanità, Editori Laterza, Roma, 2021.