1.

Introduction

Artificial Intelligence (AI) has emerged as one of the major technological drivers of new developments, shaping a whole industry of data-driven applications while also leaving an impressive footprint on the traditional economy. Myriad new applications, such as smart devices or predictive maintenance, have enabled better decision-making and a wide range of innovations. Furthermore, these decisions are becoming deeply ingrained in everyday life, often invisibly to citizens. They range from personal recommender systems that provide information specifically tailored to the reader's interests, thereby leading to information bubbles, to in-depth analysis of consumer behavior and even predictive policing.

Beyond these trends, experts see AI as one of the key technologies1 for solving the grand societal challenges represented by the UN Sustainable Development Goals2, e.g. in smart farming and smart cities. Furthermore, AI is seen as a critical success factor for businesses to stay competitive, efficient, and effective.3 This positive outlook prompts companies to invest in this new technology.

However, as AI adoption increases, the dependency of society and the economy on AI systems rises as well. At the same time, existing challenges (e.g. explainability) remain and new ones (e.g. an expanding threat landscape) arise. Past incidents have clearly demonstrated the extent of the danger, such as attacks against the self-driving capabilities of cars or biases in analytics systems used for the predictive assignment of police forces. These dangers are especially problematic with respect to critical infrastructures, where even short interruptions can cause damage to citizens and/or the economy of a Member State.

Therefore, the European Commission proposed an AI regulation (the “Artificial Intelligence Act”4), which aims to address the aforementioned challenges. But what exactly are these challenges?

To answer this question, we would first have to define “Artificial Intelligence”, or at least the key criteria of the term. This, however, might prove difficult, as various national AI strategies expressly state that there is no single commonly accepted definition of AI, nor one that is consistently used by all stakeholders.

Therefore, this contribution investigates the legal definition of AI within the proposed AI Act, contrasts it with existing definitions of AI and questions whether it is suitable for addressing the challenges that the AI Act targets, while also asking the question: “What is AI?”

2.

The AI Act and “Artificial Intelligence”

In any attempt to regulate Artificial Intelligence, a clear definition of the term is paramount. However, the term “Artificial Intelligence” is still shrouded in mystery. Although there is consensus on the potential impact of AI on society, the criteria that define AI do not yet appear to be clear. Moreover, many authors and scientists seem to have their own idea of what defines AI. This also becomes apparent in the new proposal for the AI regulation. This section explores the different ideas behind “AI” and what the term means, drawing on the groundwork of the OECD, which is also referred to in the impact assessment of the current Proposal for an “Artificial Intelligence Act” (hereinafter: “the Proposal”)5.

At the 2017 OECD conference “AI: Intelligent Machines, Smart Policies”, various experts shared their ideas of what defines AI and what risks and challenges AI poses.

Slusallek defined AI as “systems that are able to perceive, learn, communicate, reason, plan and simulate in a virtual world and act in the real world, i.e. AI simultaneously understands the real world by learning models or the ‘rules of the game’ and finds the best strategies to act given these models of reality.”6

A different approach was taken by Bryson, who defined intelligence as doing the right thing at the right time and noted that AI is an artefact that is deliberately created by humans, for which someone is responsible, and that involves computation, a physical process requiring energy, time and space.7 According to Bryson, the first AI artefact in human history was therefore “writing”, a way of storing ideas that triggered the exponential development of humankind; she further noted that intelligence allowed communication and agility as well as the discovery of new equilibria of mutual benefit. These seemingly different approaches (presented at the very same conference) exemplify the fact that a precise definition of “AI” is still highly debated.8 This prompted a more recent attempt to find a commonly applicable definition of AI by reviewing existing definitions from relevant works published between 1955 and 2019.9

The High-Level Expert Group on Artificial Intelligence (hereinafter: HLEG) provided the following baseline definition of AI:

“Artificial intelligence (AI) systems are software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information, derived from this data and deciding the best action(s) to take to achieve the given goal. AI systems can either use symbolic rules or learn a numeric model, and they can also adapt their behaviour by analysing how the environment is affected by their previous actions”10

It was acknowledged that, since this definition is highly technical and detailed, less specialised definitions can be adopted for studies with different objectives, such as enterprise surveys.11

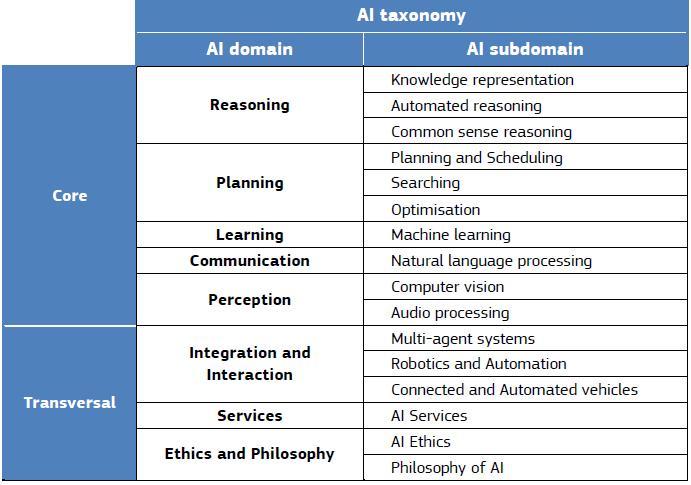

Table 1 – AI Taxonomy12

Although these works are mentioned in the Impact Assessment for the Proposal13, the problem of defining AI is mentioned only once and is not elaborated on further. Moreover, references to other current developments at EU level that address the changes brought about by digitalisation seem to indicate that there are different views even within the Commission. The reference to the current discussion on a possible revision of the Product Liability Directive (PLD14), for example, only adds to the confusion:

“It is unclear whether the PLD still provides the intended legal certainty and consumer protection when it comes to AI systems and the review of the directive will aim to address that problem. Software, artificial intelligence and other digital components play an increasingly important role in the safety and functioning of many products, but are not expressly covered by the PLD.” 15

Reading this, one has to question whether the Commission intended to differentiate between “software, artificial intelligence and other digital components” at all. However, Recital 6 of the proposed AI Act states that “[t]he notion of AI system should be clearly defined to ensure legal certainty, while providing the flexibility to accommodate future technological developments. [...]”.16

Rec. 6 then goes on to give its own definition of the key characteristics of AI:

“[...] The definition should be based on the key functional characteristics of the software, in particular the ability, for a given set of human-defined objectives, to generate outputs such as content, predictions, recommendations, or decisions which influence the environment with which the system interacts, be it in a physical or digital dimension. AI systems can be designed to operate with varying levels of autonomy and be used on a stand-alone basis or as a component of a product, irrespective of whether the system is physically integrated into the product (embedded) or serve the functionality of the product without being integrated therein (non-embedded). The definition of AI system should be complemented by a list of specific techniques and approaches used for its development, which should be kept up-to–date in the light of market and technological developments through the adoption of delegated acts by the Commission to amend that list.”17

These key criteria are also reflected in Art. 3(1) of the Proposal, which contains the proposed legal definition of an “artificial intelligence system”:

“‘[A]rtificial intelligence system’ (AI system) means software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.”

Annex I of the Proposal then defines these techniques and approaches as follows:

“ARTIFICIAL INTELLIGENCE TECHNIQUES AND APPROACHES referred to in Article 3, point 1

(a) Machine learning approaches, including supervised, unsupervised and reinforcement learning, using a wide variety of methods including deep learning;

(b) Logic- and knowledge-based approaches, including knowledge representation, inductive (logic) programming, knowledge bases, inference and deductive engines, (symbolic) reasoning and expert systems;

(c) Statistical approaches, Bayesian estimation, search and optimization methods.”

At first glance, one could conclude that this definition achieves the goal of including all the relevant techniques and approaches that are generally associated with AI. On closer inspection, however, certain problems with this definition become apparent.

First, it should be noted that this definition differs from the baseline definition of AI used by the HLEG: according to Art. 3(1) of the Proposal, AI generates outputs which influence the environments it interacts with, but the definition does not contain the element that AI systems “adapt their behaviour by analysing how the environment is affected by their previous actions”. This makes the definition in the Proposal comparatively broad.

Second, this breadth is in no way remedied by the inclusion of “certain techniques and approaches” in Annex I, as the Annex does not list specific technologies but rather a wide range of approaches. While lit. a focuses on machine learning approaches, the approaches mentioned in lit. b could, in a strict sense of the word, include knowledge graphs, any algorithm that includes Boolean logic or loops and even any searchable database (e.g. common data warehouses built on SQL and NoSQL database management systems like ORACLE, Neo4J, DB2, MySQL ...). Similarly, lit. c would also include technologies that are far from being commonly associated with AI, such as significance tests, chi-square tests, F-score, precision, R², MSE, MAE or other functions commonly used in scientific studies. One could even argue that any search function (even those implemented in the very organisation of computer memory or in the file systems of hard disks in every computer system) falls under lit. c.
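To make this point concrete, the following Python sketch collects three deliberately mundane routines: a mean squared error, a binary search and a single if/else rule. The mapping to Annex I in the comments reflects only our literal reading of lit. b and lit. c and is not an official interpretation; the function names and the temperature thresholds are hypothetical and chosen purely for illustration.

```python
from bisect import bisect_left
from statistics import mean


def mean_squared_error(y_true: list[float], y_pred: list[float]) -> float:
    """Plain MSE: a basic 'statistical approach' (read literally, Annex I lit. c)."""
    if len(y_true) != len(y_pred):
        raise ValueError("inputs must have equal length")
    return mean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def is_in_sorted(items: list[int], target: int) -> bool:
    """Textbook binary search: a 'search method' (read literally, Annex I lit. c)."""
    i = bisect_left(items, target)
    return i < len(items) and items[i] == target


def thermostat_decision(temperature_c: float) -> str:
    """A hand-written if/else rule (hypothetical thresholds).

    Arguably the simplest possible 'expert system' (Annex I lit. b), and its
    output is a 'decision' that influences a physical environment in the
    wording of Art. 3(1).
    """
    if temperature_c < 19.0:
        return "heating_on"
    elif temperature_c > 23.0:
        return "heating_off"
    else:
        return "no_change"


if __name__ == "__main__":
    print(mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
    print(is_in_sorted([2, 3, 5, 7, 11], 7))
    print(thermostat_decision(17.5))
```

None of these routines exhibits opacity, complexity or unpredictability, yet each could, on a strict reading, be captured by the definition.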

Given the way “Artificial Intelligence” is defined in the Proposal of the AI Act, it becomes quite clear that many commonly used technologies fall under the definition, even those that pose none of the challenges the regulation addresses, such as opacity, complexity, “biases” or unpredictability.18

Of course, one could argue that Artificial Intelligence according to Art. 3(1) of the proposed AI Act still requires that AI is “influencing the environments they interact with”. One could further argue that this influence must reach a certain threshold and that it is the key factor in defining AI. In this way, the broad definition of AI could be narrowed to those cases which have an expected impact on a natural person. Under such a risk-based approach, Artificial Intelligence would be defined mainly by its impact or the risk thereof. However, a systematic interpretation leads to a different conclusion: most of the proposed Artificial Intelligence Act’s obligations19 are directed at High-Risk AI20.

It therefore seems reasonable to conclude that the Commission did not intend the scope of “Artificial Intelligence” according to Art. 3(1) to be understood in the narrower sense proposed above, since most of the obligations are directed at providers of High-Risk AI. The definition of High-Risk AI is already narrow and oriented towards the potential impact of the AI, which would make the same narrowed reading of “normal” AI redundant.21

3.

What is “Artificial Intelligence”?

In the section above, we have elaborated on the broad definition of AI within the Proposal of the AI Act. Although the legal definition in the Proposal has already been contrasted with some definitions referenced either in the Proposal or in the Impact Assessment, it is worth taking a closer look at how Artificial Intelligence is understood both in academia and in national contexts. This section will show that “intelligence” (albeit artificial) is an essential part of “artificial intelligence” in the academic discussion. It will also contrast the broad definition in Art. 3(1) of the Proposal with the official views of national authorities, as this divergence might create further problems when national AI strategies have to be aligned with the AI Act.

3.1.

“Artificial Intelligence” in academia

When researching a definition of AI, it quickly becomes clear that there is no easy way to define such a concept. Most attempts to do so turn to the question of what intelligence is, how to define it and how to make such a definition applicable to a machine. The question of when a system can be considered intelligent comes up frequently. Roger C. Schank22 mentions four viewpoints on a definition of Artificial Intelligence, one of which is having a machine do things previously thought impossible for machines to achieve. This attempt at a definition can be found rather often in some variation, but it is directly founded on (personal) expectations and is thus problematic as a basis for legal liability. Most works that try to define AI use S. Russell and P. Norvig’s book23 as a basis. In their work, Russell and Norvig define AI through four different approaches: acting humanly, thinking humanly, thinking rationally and acting rationally.

As a basis for determining whether a computer is acting humanly, the Turing Test proposed by Alan Turing in 1950 is used. To pass the standard Turing Test, a computer needs the first four of the following abilities; the stricter so-called “Total Turing Test” requires all six:

- Natural language processing – in order to be able to communicate in a human understandable way

- Knowledge representation – as it needs a way to store what it knows

- Reasoning – for it to make use of the stored knowledge

- Machine learning – to recognize patterns and circumstances to adapt its behavior accordingly

- Computer vision – to perceive images or objects within its surroundings

- Robotics – to move through its surroundings or manipulate objects within them

Interestingly, these are the fields most commonly referred to when speaking about AI. Both research and commercially used AI solutions usually fall into one of these categories.

The second approach, “thinking humanly”, tries to understand the way humans think. Russell and Norvig list three possible ways of attempting this: introspection, observation or imaging of brain activity. The focus is not so much on the outcome of a model but rather on the process by which a machine arrives at its conclusions, as that is what is being modelled.

To make a program capable of thinking rationally, logic is used. Logic, in a formal sense or as a scientific field, uses a precise notation for statements about all kinds of objects in our world and the relations between them. Aristotle’s syllogisms form the basis of logic: given correct premises, a correct conclusion can be drawn. According to Russell and Norvig, this approach comes with two problems. The first is the difficulty of taking information that is less than 100% certain and stating it in the formal terms required by logic24. The second is that, as problems become more complex, the computing power needed to solve them using logic also grows. This is due to the exhaustive nature of following a logical line of argument: since a program does not know where to start, it may have to explore every possibility.
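The classic syllogism (“All men are mortal; Socrates is a man; therefore Socrates is mortal”) can be mechanised as repeated rule application. The following Python sketch is a minimal forward-chaining engine that only handles single-premise rules of the form “for all x, P(x) implies Q(x)”; this representation is our own simplifying assumption, not Russell and Norvig’s formulation, and it ignores uncertain information entirely. It also shows in miniature why cost grows: every pass checks every rule against every known fact until nothing new can be derived.

```python
# A fact is (predicate, individual), e.g. ("man", "socrates").
Fact = tuple[str, str]
# A rule is (if_predicate, then_predicate), read as: for all x, P(x) -> Q(x).
Rule = tuple[str, str]


def forward_chain(facts: set[Fact], rules: list[Rule]) -> set[Fact]:
    """Naive forward chaining up to a fixed point.

    Each pass tries every rule on every known fact (the exhaustive search
    described above) and stops once a full pass derives nothing new.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, individual in list(known):  # copy: we add while iterating
                if predicate == premise and (conclusion, individual) not in known:
                    known.add((conclusion, individual))
                    changed = True
    return known


if __name__ == "__main__":
    facts = {("man", "socrates")}
    rules = [("man", "mortal")]  # "All men are mortal"
    print(("mortal", "socrates") in forward_chain(facts, rules))
```

A routine of this size would arguably already qualify as an “inference engine” in the wording of Annex I lit. b.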

“Acting rationally” is, in a way, where all the aforementioned approaches come together to create an agent that is capable of using logic to reach a correct conclusion, and therefore to infer which decision to make, but that can also use experience by learning from changes in the environment25. Although not specifically mentioned by Russell and Norvig in this context, this is the principle used in reinforcement learning, where an agent is given a goal and receives information from its environment, such as the current state of the agent and the environment as well as the actions the agent can take. With the help of rewards and penalties, the agent learns how to achieve its goal. Such an agent therefore needs to store knowledge, learn from experience, communicate and gather information about its environment in order to draw conclusions.
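The reinforcement learning loop described above (a goal, information about the current state, a set of available actions, and rewards and penalties) can be sketched with tabular Q-learning, one standard algorithm that we have chosen purely for illustration. The corridor world, the reward values and the hyper-parameters below are hypothetical: the agent starts in cell 0, is rewarded for reaching cell 4 and receives a small penalty for every other step.

```python
import random

N_STATES = 5          # hypothetical corridor: cells 0..4
GOAL = N_STATES - 1   # reaching cell 4 is the agent's goal
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Environment: returns (next_state, reward, done).

    Reaching the goal is rewarded (+1.0); every other step is penalised (-0.01).
    Walls at both ends keep the agent inside the corridor.
    """
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, -0.01, False


def q_learning(episodes: int = 1000, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each episode
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore: random action
            else:
                best = max(q[state])             # exploit, breaking ties randomly
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Learn from experience: move the estimate towards reward + discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q: list[list[float]]) -> list[str]:
    """Best learned action for each non-goal cell."""
    return ["right" if row[1] > row[0] else "left" for row in q[:GOAL]]


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

After training, the learned policy moves right in every cell, i.e. the agent has learned from rewards and penalties alone how to reach its goal.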

Although it does not mention Russell and Norvig, the definition provided by the EU Commission’s High-Level Expert Group on Artificial Intelligence (HLEG)26 appears to have been inspired by their work, as the two definitions are largely congruent.

3.2.

National Strategies

Within the European Union, there are also various national strategies on Artificial Intelligence that reflect the respective government’s understanding of what constitutes AI. It is telling that all the national strategies the authors reviewed agree that there is no commonly accepted definition of Artificial Intelligence.27

The Austrian Government uses its own definition of AI for the purposes of its strategy, according to which AI describes “computer systems that exhibit intelligent behavior, i.e., that are capable of performing tasks that in the past required human cognition and decision-making skills. Artificial intelligence-based systems analyse their environment and act autonomously to achieve specific goals.”28

In the German AI Strategy, the German Government differentiates between “weak” AI (“focused on solving concrete application problems [...], whereby the developed systems are capable of self-optimization”) and “strong” AI (having at least the same intellectual skills as humans).29 According to the strategy, this is achieved by emulating or formally describing aspects of human intelligence or by constructing systems to simulate and support human thinking.30 The examples of weak AI given in the strategy include, inter alia, “machine learning [...]; autonomous control of robotic systems [...] intelligent multimodal human-machine interaction.”31

The Government of the United Kingdom defines AI as “machines that perform tasks normally requiring human intelligence, especially when the machines learn from data how to do those tasks.”32 According to this definition, Artificial Intelligence is characterised by the fact that it aims to replace human intelligence in a specific task. It is worth noting, however, that the UK Government has also set out a different definition of AI in the UK National Security and Investment Act, “due to the clarity needed for legislation”, as the UK Government states.33 According to this definition, “‘artificial intelligence’ means technology enabling the programming or training of a device or software to (i) perceive environments through the use of data; (ii) interpret data using automated processing designed to approximate cognitive abilities; (iii) make recommendations, predictions or decisions; with a view to achieving a specific objective.”34

“Cognitive abilities”, in turn, is defined as “reasoning, perception, communication, learning, planning, problem solving, abstract thinking or decision making”. Under this definition, the “intelligence” requirement in AI is therefore represented by the requirement to “approximate cognitive abilities”.

The Swiss AI Strategy35 builds on an extensive report by the Working Group “Artificial Intelligence”36, which contains its own definition of AI. This definition stands out in that it deliberately avoids any comparison with human intelligence, since such a comparison would require human intelligence itself to be defined, which also proves difficult. The report also highlights that the current sensational developments in AI primarily originate from methods in which computers learn autonomously (machine learning).37

France’s AI strategy, “AI for Humanity”, does not define AI by its properties but by its objectives, stating that “[o]riginally, it sought to imitate the cognitive processes of human beings. Its current objectives are to develop automatons that solve some problems better than humans, by all means available.”38

4.

Conclusion

Although there is no universally accepted definition of AI, this contribution has shown that the legal definition of AI in the current Proposal for the Artificial Intelligence Act by the European Commission diverges considerably from various national definitions of Member States, as well as from widely held opinion in the academic world.

The definition of AI in the Proposal does not require any approximation of “cognitive abilities” or any emulation of human intelligence (at least for completing specific objectives, i.e. weak AI), nor is it confined to machine learning methods. Art. 3(1) and Annex I of the Proposal therefore include not only techniques and approaches that are commonly accepted as AI, but also basic algorithms that are comparatively simple and ubiquitous in IT. One might even argue that, according to this definition, any algorithm containing if/else statements or a search routine could qualify as AI.

And while some might argue that the definition of Artificial Intelligence in Art. 3(1) of the Proposal has, at least from a legal perspective, barely any negative consequences for providers, since the bulk of the obligations is directed solely at providers of so-called “High-Risk AI”, the definition of Artificial Intelligence nevertheless has legal consequences: the Proposal, for example, provides for AI regulatory sandboxes, which allow personal data to be processed for the purpose of developing “Artificial Intelligence”. In this regard, the scope of AI according to Art. 3(1) has direct human rights39 implications.

With this contribution, the authors aim to further the discussion on the scope of the current Proposal for the Artificial Intelligence Act and advocate a narrower definition of AI therein that specifically addresses the dangers and problems associated with AI, as outlined in its impact assessment. The question of what AI is should therefore be answered individually for the specific purpose of the definition (e.g. to address specific dangers of certain technologies) and in such a way that it allows for an unemotional, scientific debate.

- 1 See Presidency of the Committee of Ministers of the Council of Europe/Council of Europe, Current and Future Challenges of Coordinated Policies on AI Regulation – Conclusions, https://rm.coe.int/26-oct-ai-conf-hu-presidency-draft-conclusions-en/1680a44daf (visited 22 November 2021).

- 2 Chui, Michael, Chung, Rita, Van Heteren, Ashley, Using AI to help achieve Sustainable Development Goals, https://www.undp.org/blog/using-ai-help-achieve-sustainable-development-goals (visited 22 November 2021).

- 3 Tran, T., Trank, N., Felfernig, A., Trattner, C., Holzinger, A., Recommender systems in the healthcare domain: state-of-the-art and research issues, Journal of Intelligent Information Systems 57/1, 2021, p. 171–201.

- 4 See below.

- 5 Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts, COM/2021/206 final.

- 6 Organisation for Economic Co-operation and Development OECD, AI: Intelligent Machines, Smart Policies – Conference Summary, OECD Digital Economy Papers, https://www.oecd-ilibrary.org/science-and-technology/ai-intelligent-machines-smart-policies_f1a650d9-en (visited 24 November 2021), p. 11.

- 7 Ibid., p. 26.

- 8 European Commission, Commission Staff Working Document Impact Assessment Accompanying the Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules On Artificial Intelligence (Artificial Intelligence Act) And Amending Certain Union Legislative Acts, SWD (2021) 84 final, Part 1/2, p. 2 (hereinafter: “Impact Assessment Accompanying the Proposal”).

- 9 See Samoili, S., López Cobo, M., Gómez, E., De Prato, G., Martínez-Plumed, F., Delipetrev, B., AI Watch. Defining Artificial Intelligence – Towards an operational definition and taxonomy of artificial intelligence, Luxembourg 2020, p. 2.

- 10 High-Level Expert Group on Artificial Intelligence, A Definition of AI: Main Capabilities and Disciplines, 2019, https://digital-strategy.ec.europa.eu/en/library/definition-artificial-intelligence-main-capabilities-and-scientific-disciplines (visited 25 November 2021), p. 8; this definition is used as well in High-Level Expert Group on Artificial Intelligence, Ethics Guidelines for Trustworthy AI, 2019.

- 11 Samoili, S., López Cobo, M., Gómez, E., De Prato, G., Martínez-Plumed, F., Delipetrev, B., AI Watch. Defining Artificial Intelligence – Towards an operational definition and taxonomy of artificial intelligence, Luxembourg 2020, p. 9.

- 12 Ibid., p. 11 and 16–17. On the basis of the HLEG definition, the authors of the AI Watch report then proceeded to develop an “operational” definition of AI, wherein the core elements of AI were deduced and represented via a list of subdomains (see Table 1), followed by a list of keywords that best represent each subdomain.

- 13 European Commission, Impact Assessment Accompanying the Proposal (FN 8), SWD (2021) 84 final, Part 1/2, p. 1.

- 14 Council Directive 85/374/EEC of 25 July 1985 on the approximation of the laws, regulations and administrative provisions of the Member States concerning liability for defective products, OJ L 1985/210, p. 29.

- 15 European Commission, Impact Assessment Accompanying the Proposal (FN 8), SWD (2021) 84 final, Part 1/2, p. 8, FN. 49.

- 16 Rec. 6 Proposal, emphasis added.

- 17 Ibid., emphasis added.

- 18 See Proposal – Explanatory Memorandum, p. 7 and European Commission, Commission Staff Working Document Impact Assessment Accompanying the Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules On Artificial Intelligence (Artificial Intelligence Act) And Amending Certain Union Legislative Acts, SWD (2021) 84 final, Part 1/2, p. 13 ff.

- 19 Title III addresses exclusively High-Risk AI Systems (and contains Art. 6–51 of the Proposal of the AI Act).

- 20 High-Risk AI systems are defined in Art. 6 of the Proposal.

- 21 There are still legal consequences connected to AI, which we address further below.

- 22 Schank, Where’s the AI?, AI Magazine 12/4, AAAI, USA 1991, p. 38.

- 23 Russell, Norvig, Artificial Intelligence: A Modern Approach, 3rd ed., Pearson Education, USA 2010.

- 24 It must be noted, though, that there are methods such as non-monotonic logics (e.g. default logics) that focus on solving this issue and provide a more intuitive way of reasoning.

- 25 More advanced methods, e.g. in reinforcement learning, actually differentiate between changes caused by the agent itself and changes caused by external sources.

- 26 High-Level Expert Group on Artificial Intelligence, Ethics guidelines for trustworthy AI, 2019.

- 27 Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology/Federal Ministry for Digital and Economic Affairs of Austria, Strategie der Bundesregierung für Künstliche Intelligenz, Artificial Intelligence Mission Austria 2030 Austrian Strategy, 2021, p. 16; Government of Germany, Strategie Künstliche Intelligenz der Bundesregierung, AI made in Germany, 2020, p. 4; HM Government (UK), National AI Strategy, 2021, p. 16.

- 28 Ibid., this is based on the definition of the Austrian Council on Robotics and Artificial Intelligence (ACRAI), Shaping the Future of Austria with Robotics and Artificial Intelligence – Whitepaper, 2018, https://www.acrai.at/wp-content/uploads/2020/03/ACRAI_White_Paper_EN.pdf (visited 25 November 2021).

- 29 Government of Germany, Strategie Künstliche Intelligenz der Bundesregierung, AI made in Germany, 2020, p. 4.

- 30 Ibid.

- 31 Ibid.

- 32 HM Government (UK), National AI Strategy, 2021, p. 16.

- 33 Ibid., FN 2.

- 34 See National Security and Investment Act 2021, https://bills.parliament.uk/bills/2801/publications (visited 24 November 2021).

- 35 See Swiss Federal Council, Leitlinien “Künstliche Intelligenz” für den Bund (“Guidelines for AI”), 2020.

- 36 Interdepartmental Working Group “Artificial Intelligence”, Herausforderungen der künstlichen Intelligenz („Challenges of Artificial Intelligence“), 2019.

- 37 See Interdepartmental Working Group “Artificial Intelligence”, Herausforderungen der künstlichen Intelligenz („Challenges of Artificial Intelligence“), 2019, p. 19.

- 38 See Villani, For a Meaningful Artificial Intelligence: Towards a French and European Strategy, 2018, p. 4.

- 39 I.e., the Right to Data Protection; Art. 8 Charter of Fundamental Rights (CFR).